Hi! I'm a junior in ECE and I'm not quite sure what area I'm going to pursue but I have interests in power, optics, and also robotics. I rock climb in my free time (or I did before COVID) and like hiking as well.

Beep Boop

Collegeville, PA (Working from home though the CEI Lab is located at Cornell University, Ithaca NY)

Systems level design and implementing dynamic autonomous robots

Designing a fast autonomous car and exploring dynamic behaviors, acting forces, sensors, and reactive control on an embedded processor

Artemis Setup

The purpose of this lab was to get set up and familiar with the Arduino IDE and the Artemis board. After this lab, I was able to program my board, blink the LED, read/write serial messages over USB, display the output from the onboard temperature sensor, measure the loudest frequency recorded by the pulse density modulation (PDM) microphone, and run the board from a battery instead of my computer.

I was able to successfully connect to the Artemis Nano module and run the Blink Example.

I ran the Example2_Serial script and confirmed that the serial port was working.

I also ran the analogRead example and could read out the voltage and temperature of the module.

The Example1_MicrophoneOutput script allowed me to read the loudest frequency on the serial port. I demonstrated it by snapping my fingers (sadly, I can't whistle, so I did that instead).

Lastly, I modified the microphone script so that the LED turns on whenever the loudest frequency it detects is above 4000 Hz.
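A "loudest frequency" is typically found by taking an FFT of the microphone samples and picking the strongest bin. The C++ sketch below shows that idea together with the 4000 Hz LED rule. It assumes the FFT magnitudes have already been computed. The helper names, sample rate, and FFT size are illustrative assumptions and are not taken from the SparkFun example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Index of the strongest FFT bin, skipping bin 0 (the DC offset).
// `magnitudes` is assumed to hold the first half of an N-point FFT.
std::size_t loudestBin(const std::vector<float>& magnitudes) {
    assert(magnitudes.size() >= 2);
    std::size_t best = 1;
    for (std::size_t i = 2; i < magnitudes.size(); ++i) {
        if (magnitudes[i] > magnitudes[best]) best = i;
    }
    return best;
}

// Bin k of an N-point FFT sampled at fs Hz is centered at k * fs / N Hz.
uint32_t binToHz(std::size_t bin, uint32_t sampleRateHz, std::size_t fftSize) {
    assert(fftSize > 0);
    return static_cast<uint32_t>(static_cast<uint64_t>(bin) * sampleRateHz / fftSize);
}

// LED rule from the text: on when the loudest frequency is above 4000 Hz.
bool ledShouldBeOn(uint32_t loudestHz) {
    constexpr uint32_t kThresholdHz = 4000;
    return loudestHz > kThresholdHz;
}
```

With an assumed 16 kHz sample rate and a 16-point FFT, bin 6 maps to 6000 Hz, which would turn the LED on.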

Bluetooth Communication

Materials/Code Needed

1 x SparkFun RedBoard Artemis Nano

1 x USB A-C cable

1 x Bluetooth adapter

Distribution code

This lab involved testing the low-latency, moderate-throughput wireless communication between the Artemis board and a computer via Bluetooth LE.

The main files to be modified for this lab were ECE_4960_Robot.ino, which was the Arduino sketch to be uploaded, and main.py, which was the Python Bluetooth example.

I first plugged in my Artemis board with my USB-C cable, set up USB passthrough to my Ubuntu VM through the VirtualBox Extension Pack, and installed Bleak, a Python GATT client library capable of connecting to BLE devices.

After setting up the USB passthrough, I downloaded the distribution code, uploaded ECE_4960_Robot.ino with the Arduino IDE, and then ran main.py to discover my robot, caching its address "66:77:88:23:BB:EF" in Settings["cached"] in settings.py. I noticed that the Bluetooth connection sometimes fails. When that happens, turning Bluetooth off and back on under "Settings" in my VM usually resolves the issue.

Here's a screenshot of the Serial Monitor of the Arduino side:

In the distribution code, command.h includes a 99-byte structure (cmd_t) with the first byte as the command type, the second as a length, and the rest as data.
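Here is a minimal C++ sketch of that 99-byte layout. It assumes the fields are plain uint8_t values in the order described. The names mirror the res_cmd fields used later, but the exact declaration in command.h may differ.

```cpp
#include <cstddef>
#include <cstdint>

// Illustrative layout: 1 byte type + 1 byte length + 97 bytes of data = 99 bytes.
constexpr std::size_t kCmdSize = 99;

struct cmd_t {
    uint8_t command_type;          // which command this is
    uint8_t length;                // number of meaningful bytes in data
    uint8_t data[kCmdSize - 2];    // payload
};

// All fields are single bytes, so the compiler inserts no padding.
static_assert(sizeof(cmd_t) == kCmdSize, "header + payload should total 99 bytes");
static_assert(offsetof(cmd_t, data) == 2, "payload starts after the two header bytes");
```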

Initially, to check my connection with the robot, I ran await theRobot.ping() in the asynchronous function myRobotTasks().

I was able to successfully discover my robot after configuring the Bluetooth and the received output was a bunch of print-out statements confirming the ping (and pong) as well as the round trip latency. On average, the round-trip latency looked to be around 0.114 seconds.

Also, as shown in the screenshot and by what I observed by graphing the latencies for 60 pings, this seemed to be fairly consistent throughout all the pings, with most falling between 0.11 and 0.12 seconds.
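A set of ping round-trip times like this can be summarized with a small helper that computes the mean, the extremes, and the share of samples inside a band such as 0.11-0.12 s. The C++ sketch below is illustrative, uses hypothetical names, and is not the code behind the graph.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct LatencyStats {
    double mean;
    double min;
    double max;
    double fractionInBand;  // share of samples inside [lo, hi]
};

// Summarize round-trip times (in seconds) collected from repeated pings.
LatencyStats summarize(const std::vector<double>& rtt, double lo, double hi) {
    assert(!rtt.empty());
    double sum = 0.0;
    std::size_t inBand = 0;
    for (double t : rtt) {
        sum += t;
        if (t >= lo && t <= hi) ++inBand;
    }
    auto [mn, mx] = std::minmax_element(rtt.begin(), rtt.end());
    return {sum / rtt.size(), *mn, *mx,
            static_cast<double>(inBand) / rtt.size()};
}
```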

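For comparison, the 115200 baud rate applies to the USB serial link to the Serial Monitor, not to the BLE link, so the two aren't directly comparable. Still, with the usual 8N1 framing (10 bits on the wire per byte), 115200 baud moves about 11.5 kB/s. Even a full 99-byte packet would cross the serial link in under 9 ms, which is much faster than the ~114 ms BLE round trip.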
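The serial-link arithmetic is just bits on the wire divided by the baud rate. The sketch below assumes 8N1 framing (start bit, 8 data bits, stop bit).

```cpp
#include <cassert>

// Seconds needed to push `bytes` over a UART at `baud` with 8N1 framing:
// each byte costs 10 bits on the wire (start + 8 data + stop).
double serialSeconds(unsigned bytes, unsigned baud) {
    assert(baud > 0);
    const double bitsPerByte = 10.0;
    return bytes * bitsPerByte / baud;
}
```

For example, serialSeconds(99, 115200) comes out to roughly 0.0086 s.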

Requesting a float

To request a float, I first commented out await theRobot.ping() on the Python side and instead used await theRobot.sendCommand(Commands.REQ_FLOAT).

In the Arduino sketch, I wrote the code shown below, which the sketch jumps to when it receives the REQ_FLOAT command.

There's a data structure called res_cmd with three fields:

data (the float to send),

command_type (in this case, it's GIVE_FLOAT which the Python side will recognize and then print the float),

and length (which is the length of the data).

I filled in the command type and length as described. I then used memcpy(dest, src, n), a function suggested on Campuswire, to copy the float into the buffer starting at res_cmd->data.

I was then able to send the float with amdtpsSendData((uint8_t*)res_cmd, 6). I used 6 for the second argument because that is the size of the float (4 bytes) plus the length (1 byte) and the command type (1 byte).
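The packing step can be sketched in portable C++ as below. The buffer layout follows the description above. kGiveFloat is a hypothetical placeholder for the real GIVE_FLOAT value in command.h, and packFloat is an illustrative helper, not the sketch's actual code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical stand-in for GIVE_FLOAT; the real value lives in command.h.
constexpr uint8_t kGiveFloat = 0x05;

// Fill buf with [command_type][length][4 float bytes] and return the number of
// bytes to hand to the send function: 1 + 1 + sizeof(float) = 6.
std::size_t packFloat(uint8_t* buf, float value) {
    assert(buf != nullptr);
    buf[0] = kGiveFloat;
    buf[1] = sizeof(float);                        // payload length in bytes
    std::memcpy(&buf[2], &value, sizeof(float));   // copy the raw float bytes
    return 2 + sizeof(float);
}
```

On a little-endian CPU such as the Artemis's Cortex-M4, memcpy leaves the float's least significant byte first, which is the order the Python side unpacks.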

Through the simpleHandler on the Python side, I was able to unpack the float I sent. main.py recognized the GIVE_FLOAT command_type sent from the Arduino side and unpacked the bytes as a little-endian float, as shown below.
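Decoding "as a little-endian float" means assembling the four bytes least significant first and reinterpreting the resulting bit pattern as an IEEE-754 single, which is what Python's struct format "<f" does. Here is a host-independent C++ sketch with a hypothetical helper name:

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Rebuild a float from 4 bytes stored little-endian (least significant byte
// first), regardless of the host's own byte order.
float floatFromLittleEndian(const uint8_t b[4]) {
    assert(b != nullptr);
    uint32_t bits = static_cast<uint32_t>(b[0])
                  | (static_cast<uint32_t>(b[1]) << 8)
                  | (static_cast<uint32_t>(b[2]) << 16)
                  | (static_cast<uint32_t>(b[3]) << 24);
    float f;
    std::memcpy(&f, &bits, sizeof f);  // reinterpret the IEEE-754 bit pattern
    return f;
}
```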

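The value displayed is not exactly the value I sent (3.1415). This isn't a transmission error. 3.1415 has no exact representation as a 32-bit float, so the Artemis stores the nearest representable value. When Python unpacks that value and prints it as a double, the rounding error shows up in the extra digits. A float only holds about 7 significant decimal digits.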
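To see the rounding directly, convert the double 3.1415 to a float and back, then compare. The helper below is illustrative and assumes IEEE-754 float and double.

```cpp
#include <cassert>
#include <cmath>

// Absolute error introduced by storing a double in a 32-bit float
// (assumes IEEE-754 binary32/binary64, as on the Artemis and typical PCs).
double representationError(double x) {
    assert(std::isfinite(x));
    const float stored = static_cast<float>(x);  // rounds to the nearest float
    return std::fabs(static_cast<double>(stored) - x);
}
```

representationError(3.1415) is a few billionths (about 3.8e-9). By contrast, 0.5 round-trips exactly because it is a power of two.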


Testing the Data Rate

The last part of the lab was measuring the data rate by streaming bytes from the Artemis to my computer.

In the main Python script, I found the call await theRobot.testByteStream(25) in my function myRobotTasks(). Then, in the Arduino sketch, I added code to the if (bytestream_active) case.

As an example payload, I sent a 32-bit integer and a 64-bit integer, using memcpy() to place the 64-bit integer at the address right after the 32-bit integer. Since I wanted to find the average time between packets, I made the 32-bit integer the number of packets the Arduino had sent and the 64-bit integer the time elapsed between packet transmissions, as shown below.
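The payload layout (a 32-bit count followed immediately by a 64-bit time) can be sketched as below. The helper name is hypothetical. With the 2 header bytes, one count/time pair makes a 14-byte packet, and four pairs make 2 + 4 × 12 = 50 bytes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Place a 32-bit packet counter followed immediately by a 64-bit elapsed time
// (microseconds) into a payload buffer. memcpy avoids unaligned 64-bit stores.
// Returns the number of payload bytes written (4 + 8 = 12).
std::size_t packCounterAndTime(uint8_t* payload, uint32_t count, uint64_t elapsedUs) {
    assert(payload != nullptr);
    std::memcpy(payload, &count, sizeof count);
    std::memcpy(payload + sizeof count, &elapsedUs, sizeof elapsedUs);
    return sizeof count + sizeof elapsedUs;
}
```

On the Python side, the matching "4s8s" format splits the same 12 bytes back into the two fields.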

On the Python side, I first split the byte array into two byte arrays using first, second = unpack("4s8s", data). I then unpacked those into an integer and a long, respectively. Finally, I printed the packet count reported by the Arduino, the time between packets in microseconds and in seconds, and the number of packets received on the Python side.

This is shown in the figure.

(# Arduino packets, time in us, time in s, # Python packets)

One bug confused me for a little while: the number of packets counted as sent on the Arduino side was significantly smaller than the number of packets received over Bluetooth. This was obviously wrong, because packets don't multiply on the receiving side. I realized the problem was the threading in the Python script. Because I was rerunning the program back-to-back too quickly, the previous runs' threads hadn't fully shut down, and I ended up with about 8 copies of main.py running at the same time (which is a bit of a yikes).

Luckily, this was resolved. It can apparently be avoided by waiting a bit after ending the program before running it again.

The average time between packets was also about the same for a larger payload (50 bytes), at 10.83 ms, as shown below. I did this by sending four 32-bit integers and four 64-bit integers (48 bytes of data plus the 2 header bytes). I think the times are about the same because the full 99-byte structure is still being sent either way.

The big difference, I think, is in packet loss. When sending 14 bytes, about 1585 of 2000 packets were received, a loss of 20.75%. When sending 50 bytes, however, the loss was 58.9%, which is much higher than I expected. So, to transfer a large set of data this way, you would want to send it in small packets rather than stuffing the 99-byte buffer with as much data as possible. This might take a while (a second or two), but it would give you more reliable data.
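The loss figures come from (sent - received) / sent. Here is a minimal sketch:

```cpp
#include <cassert>

// Percentage of packets that never arrived.
double lossPercent(unsigned sent, unsigned received) {
    assert(sent > 0 && received <= sent);
    return 100.0 * (sent - received) / sent;
}
```

For example, lossPercent(2000, 1585) gives 20.75.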

Characterizing the car


The goal of this part of the lab was to document the car in any way that I thought might be useful later on. Here are some useful measurements/observations:

Dimensions and Basic Stats:

Testing the Car's Capabilities:

Manual Control:

Tricks:

(Yikes, my robot is better at parallel parking than me)

(Breaking and Entering)

Running the Virtual Robot

I installed the software dependencies and set up the base code in my VM.

I then ran lab3-manager and pressed "a" and then "s" to start the simulator, which let me run tests on my robot.

I also ran robot-keyboard-teleop to control the virtual robot with keyboard commands.

The virtual robot can move at very low linear and angular speeds (to the point where it's basically not moving) as well as very high ones (as shown below). Because it is a simulation, the robot can change its angular and linear speed and direction practically instantaneously, and effects such as friction and skidding are not currently modeled.

Robot moving in the simulation (Gotta go fast)

From my few tests on the virtual robot, I saw that when the robot bumps into an obstacle, a warning pops up and the simulation pauses. You then have to manually drag the robot back to an unoccupied spot.

Motor driver & open loop control

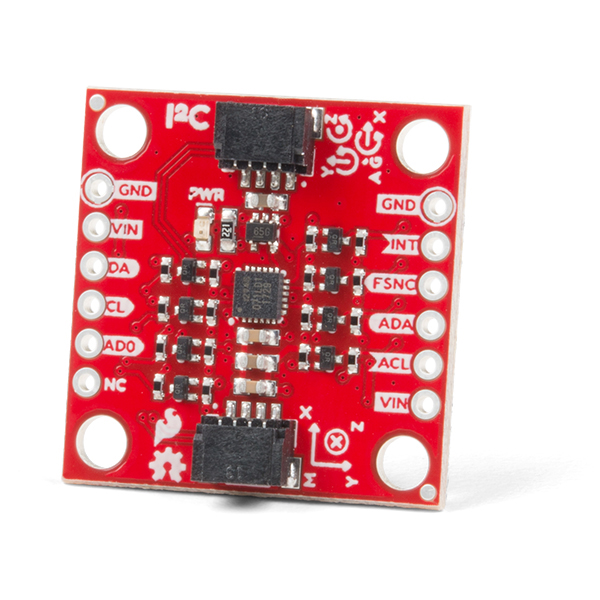

The purpose of the lab was to change from manual to open loop control of the car. At the end of the lab, my car was able to execute a pre-programmed series of moves using the Artemis board and the motor driver over I2C.

Parts Required:

Lab 4a:

I first hooked up the Artemis and the motor driver using a Qwiic connector. I then ran Example_wire and found the I2C address to be 0x5D (93).

I then took the car apart, unscrewing and removing the shell and bumpers. I also removed the battery, took out the control PCB, and cut its power lines. I connected the power wires to the SCMD instead, noting that VIN on the SCMD marks the positive terminal.

After that, I cut the connector headers off the motor wires and connected the wires directly to the terminals on the SCMD. I mounted the SCMD where the original control PCB had been and used the Qwiic connector to connect the Artemis board to the motor driver. Note: the Qwiic connector was too big to route through the hole in the chassis, so I drilled a bigger hole to feed the wires through.

I also added a red jumper between the PSWC pins on the Artemis board so I could easily switch the board on and off (so I didn't have to yank out the battery connector every time).

Using the Arduino library manager, I installed the SCMD (Serial Controlled Motor Driver) library. I was then able to make the robot follow a straight line after noticing that the right motor is stronger than the left. To compensate for the imbalance, I drove the left motor forwards (motorNum = 0, direction = 1) with myMotorDriver.setDrive(0, 1, 118) and the right motor forwards (motorNum = 1, direction = 1) with myMotorDriver.setDrive(1, 1, 100).
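One way to reuse the hand-tuned 118:100 ratio at other speeds is to scale the left command from the right one and clamp the result to the driver's 0-255 range. This is a hypothetical helper. It assumes the ratio holds roughly linearly away from the level it was tuned at, which may not be true near the low-speed limits described next.

```cpp
#include <algorithm>
#include <cstdint>

// Hypothetical helper: scale a right-motor level by the hand-tuned 118:100 ratio
// to get the matching left-motor level, rounded and clamped to 0-255.
// Assumes the ratio stays roughly constant away from the level it was tuned at.
constexpr uint8_t leftLevelFor(uint8_t rightLevel) {
    const int scaled = (rightLevel * 118 + 50) / 100;  // +50 rounds to nearest
    return static_cast<uint8_t>(std::min(scaled, 255));
}
```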

I then tested the lower limit at which each motor still turns. For the left motor, this seemed to be around myMotorDriver.setDrive(0, 1, 68). For the right motor, it seemed to be around myMotorDriver.setDrive(1, 1, 50).

Technically, the motors can start at lower values, but then the wheels need a little help (a nudge) to get going. I only counted the threshold at which the wheels could fully turn without any assistance from me. I also only counted the threshold at which the car could actually move forwards. The wheels could spin at a lower value with the car on its back, but I didn't think that mattered much, since at that level friction and the car's weight kept it from moving forwards.

Lastly, I just used open loop control of the robot to drive it around and make it spin around its own axis.

Lab 4b: Open Loop Control

Setting up the Base Code and Starting the Simulator

I set up the base code by extracting the lab 4 base code in my VM and running ./setup.sh in my terminal, which compiled the lab successfully.

To start the lab4 manager, I opened a terminal window and ran lab4-manager.

I then launched the simulator by pressing "a" and then "s" on my keyboard.

Jupyter Lab Code

We were introduced to Jupyter Lab to write our Python code. To start a Jupyter server, I did the following:

```shell
cd /home/artemis/catkin_ws/src/lab4/scripts
jupyter lab
```

I was then able to work with the Robot class, which provides a control interface for the robot in the simulator. It supports getting the robot's odometry pose, moving the robot, and getting range finder data.

In order to have my robot move in a rectangular loop, I first imported the necessary modules for my Python script and then instantiated/initialized an object of class Robot. I then set the linear velocity to a value and the angular velocity to 0, let this run for a length of time, and then set the linear velocity to 0 and the angular velocity to a value, letting this run for a length of time. By tuning my values, I could make the angles 90 degrees and modify the size of my rectangle by changing the amount of time I let the robot run with a non-zero linear velocity.

To time each segment, I used time.sleep(). To make 90-degree turns, I set the angular velocity to 2 for 1 second.
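For comparison, if the simulated robot reached the commanded velocities instantly, the open-loop durations would follow directly from t = distance / speed and t = angle / angular speed. The sketch assumes the angular velocity is in rad/s, which the text above does not state. Under that assumption, a 90-degree turn at 2 rad/s would take about 0.79 s instead of 1 s. That gap suggests the simulated robot does not turn at exactly the commanded rate (or that the units differ), which is presumably why the timing had to be tuned by trial.

```cpp
#include <cassert>
#include <cmath>

// Ideal open-loop timing, assuming commanded velocities are reached instantly.
double legSeconds(double lengthM, double linearMps) {
    assert(linearMps > 0.0);
    return lengthM / linearMps;        // straight leg: t = d / v
}

double turnSeconds(double angleRad, double angularRadps) {
    assert(angularRadps > 0.0);
    return angleRad / angularRadps;    // in-place turn: t = theta / omega
}

const double kQuarterTurn = std::acos(-1.0) / 2.0;  // 90 degrees in radians
```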

My code and demonstration are shown below:

Prox, TOF, Obstacle avoidance

The purpose of the lab was to enable the robot to perform obstacle avoidance by putting distance sensors on the car, getting them working, and then attempting fast motion around the room without crashing into obstacles.

Parts Required:

Lab 5a: Obstacle Avoidance

Prelab:

I read over the datasheet and manual for the proximity board and found its I2C address to be 0x60. I also read over the documentation for the ToF breakout board, which has an I2C address of 0x52.
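Datasheets and scanners can disagree on I2C addresses because some datasheets quote the 8-bit write address, which is the 7-bit address shifted left by one bit. The VL53L1X's 0x52 corresponds to the 7-bit address 0x29, and the 7-bit form is what Arduino's Wire library and I2C scanners use. The VCNL4040's 0x60 is already a 7-bit address. A tiny C++ illustration with a hypothetical helper:

```cpp
#include <cstdint>

// Convert an 8-bit I2C write address (7-bit address << 1, R/W bit = 0)
// into the 7-bit form used by Arduino's Wire library and I2C scanners.
constexpr uint8_t toSevenBit(uint8_t eightBitWriteAddr) {
    return static_cast<uint8_t>(eightBitWriteAddr >> 1);
}
```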

The Proximity Sensor Breakout can read from 0 cm (the face of the sensor) to about 20 cm away. However, it doesn't have a wide angular range. It's also not very accurate for quantitative distance readings, but it is good at detecting whether objects appear in front of it. Ideally, we would mount it on the front of the car so the robot doesn't bump into objects ahead of it. There will still be scenarios where the robot bumps into things on the side, since it basically lacks peripheral vision. In addition, a single front sensor means the robot can't see behind itself and would run into anything back there. Finally, since the range is only 20 cm, the robot might get close to an object without detecting it, either because of sensor inaccuracies or because the object is just over 20 cm away. In that case the robot would not be able to react and stop in time.

The ToF breakout board has a FoV of 15-27 degrees, so it also basically only looks straight ahead. Its maximum distance in the dark is 136, 290, or 360 cm depending on the distance mode (short, medium, or long). This distance drops in ambient light, which interferes with the measurement.

It will have the same narrow-FoV problems as the proximity sensor. However, it is much better at quantitative measurement and less sensitive to color and texture. Putting it at the front of the car is the best approach, since the car primarily drives forwards and turns when it sees obstacles. Because of that front position, the car would miss obstacles in the same way it would with the proximity sensor. It would have fewer problems with range, though, since its range is 1.3 m as opposed to 20 cm.

There are a multitude of distance sensors based on IR transmission.

Proximity Sensor

Using the Arduino library manager, I installed the SparkFun VCNL4040 Proximity Sensor Library and hooked up the sensor. I scanned the I2C channel to find the sensor at 0x60, which matches with the datasheet. I could then test the sensor using the Example4_AllReadings script.

Here is a graph of proximity value vs. distance for 3 differently colored objects in a well-lit room: a square white box, a pack of yellow sticky notes, and my black notebook. The color clearly affected the proximity value: the darker the object, the lower the value. This is likely because darker materials absorb more of the IR, while lighter materials reflect more of it back to the sensor.

All of them show a drastically higher value at close distances than at farther ones, and the log-scale plot makes this especially clear. I think this indicates that the proximity sensor is best used for objects/obstacles that appear very close to the robot, since it is most sensitive in that range.

I then tested the white box under different ambient lighting (I turned the lights off and tested it in the dark). The values were very close and basically indistinguishable, so ambient light levels shouldn't have a major impact on the proximity value.

I also tested different textures. Comparing a black matte surface (a phone case) with a black reflective surface, I saw higher values for the reflective surface. As with the white and yellow objects earlier, the reflective surface sent more of the IR back to the sensor, while the matte phone case mostly absorbed it. This is a downside of the proximity sensor, since its readings depend on the target's reflectivity.

Lastly, I looked at the ambient light and white level data. The ambient light reading was lower the closer the object was to the sensor, since a closer object blocks more ambient light from reaching the sensor. The black object also had the lowest ambient light and white levels, for the same reasons given above. I am also assuming that the shadow cast when the object was very close to the sensor caused the lower white levels at small distances. I therefore conclude that the sensor's ambient and white readings are sensitive to ambient light, though the proximity values don't seem to be affected by it.

Each of the getWhite(), getAmbient(), and getProximity() functions takes about 630 us, which corresponds to a fairly high rate (about 1.6 kHz). I measured it as follows:

```cpp
unsigned long time0 = micros();
unsigned int proxValue = proximitySensor.getProximity();
unsigned long measTime = micros() - time0;
Serial.println(measTime);
```
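Timing a single call with micros() gives one noisy sample, and averaging over many calls is usually steadier. Here is the same idea in portable C++ using std::chrono. It is a host-side sketch with a hypothetical helper name, not Artemis code.

```cpp
#include <cassert>
#include <chrono>
#include <functional>

// Average wall-clock cost of fn over n calls, in microseconds. Timing many calls
// and dividing smooths out per-call jitter and the clock's finite resolution.
double averageMicros(const std::function<void()>& fn, int n) {
    assert(n > 0);
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < n; ++i) fn();
    const auto stop = std::chrono::steady_clock::now();
    const std::chrono::duration<double, std::micro> total = stop - start;
    return total.count() / n;
}
```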

However, I noticed that it takes about 4 seconds for the data to stabilize after a sudden change. The sensor readings can be a bit jumpy, so it's definitely better for qualitative as opposed to quantitative measurements.

Time of Flight Sensor

Using the Arduino library manager, I installed the SparkFun VL53L1X 4m laser distance sensor library and connected the sensor to the Artemis board with a Qwiic connector. I scanned the I2C bus and found the sensor at 0x29, the 7-bit form of the 0x52 address given in the datasheet. I then tested the sensor using the Example1_ReadDistance script, after first running the Calibration script with the gray target that came with our kit. The calibration code didn't seem to work as expected at first, so I added calls to startRanging() and stopRanging(), after which it ran correctly.

The timing budget has a minimum of 20 ms and a maximum of 1000 ms. To collect as much data as possible while running the robot in short distance mode, we would set the timing budget to 20 ms. Power consumption is also a factor, since we don't necessarily want the sensor collecting data constantly. The repeatability error is higher at lower timing budgets, so I set the timing budget a little above the minimum, at 30 ms.

We also consider the robot's max speed when choosing the intermeasurement period (the gap between measurements, during which the sensor is in low-power mode). The robot has a max speed of around 8 ft/s, and I think the next part of the lab involves making it go as fast as possible. To make sure the robot doesn't collide with walls or objects when traveling quickly, I estimated that we want to check the robot's distance at least every 4 inches (1/3 ft). Using t = d/v, that gives (1/3 ft) / (8 ft/s) ≈ 41.7 ms.

So the intermeasurement period should be around 41.7 - 30 ≈ 12 ms (assuming the 30 ms timing budget). I set my value to 5, knowing that any value below the 30 ms timing budget means the sensor immediately starts ranging again after each measurement completes.
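The timing estimate is t = d/v with an inches-to-feet conversion, and the same v = d/t relation gives the speed of a timed run. Here is a small sketch with hypothetical helper names:

```cpp
#include <cassert>

constexpr double kInchesPerFoot = 12.0;

// Milliseconds needed to cover `inches` at `feetPerSecond`: t = d / v.
double msToTravel(double inches, double feetPerSecond) {
    assert(feetPerSecond > 0.0);
    return (inches / kInchesPerFoot) / feetPerSecond * 1000.0;
}

// Average speed (ft/s) over a timed run: v = d / t.
double feetPerSecond(double feet, double seconds) {
    assert(seconds > 0.0);
    return feet / seconds;
}
```

msToTravel(4.0, 8.0) comes out near 41.7 ms. feetPerSecond(6.0, 1.57), for a 6 ft line covered in 1.57 s, is about 3.8 ft/s.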

I set these values using:

```cpp
distanceSensor.setTimingBudgetInMs(30);
distanceSensor.setIntermeasurementPeriod(5);
```

To optimize ranging performance, I decided to use short distance mode, since the room I'm running the robot in is not very large. The range in short distance mode is also about 1.3 meters (4.25 feet), which seems like an adequate distance to start stopping a robot going at 8 ft/s. The coasting distance (from Lab 3) was around 5 feet, but I can cut this down by reversing the motors (though not too hard, or the car will flip). I set this mode using distanceSensor.setDistanceModeShort();.

It appears the most common errors are a signal fail or a wrapped target fail, which seem to be due to shaking or sudden movements that the sensor sees. Since it is sensitive to these sudden movements, I can use these failure mode indications to ignore the readings that result during those errors.

Lastly, I documented the ToF sensor range and accuracy. I had my test setup as shown below, running the sensor in Short Mode since that's the mode I'm using for obstacle avoidance:

My data is as shown below:

The sensor has good repeatability. I ran the test three times on 3 different days under roughly the same ambient light (in the same room with the same lights on), and the resulting graphs were basically identical. I took 100 measurements at each distance point (every 100 mm) and computed the mean and SD at each. The ToF SDs were minimal, and the means were extremely close to the actual distance up to around 1250 mm. Beyond that, the mean started to deviate from the actual distance (increasing more slowly than its original linear trend), and the SD increased drastically. I therefore conclude that the sensor is extremely accurate within at least a 1250 mm range.
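The per-distance statistics can be computed as below. This sketch uses the sample standard deviation (dividing by n - 1). The text does not say whether the original analysis used this or the population form, so treat it as an assumption.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct MeanSd {
    double mean;
    double sd;  // sample standard deviation (n - 1 in the denominator)
};

// Mean and sample standard deviation of repeated readings at one distance.
MeanSd meanAndSd(const std::vector<double>& readings) {
    assert(readings.size() >= 2);
    double sum = 0.0;
    for (double r : readings) sum += r;
    const double mean = sum / readings.size();
    double sq = 0.0;
    for (double r : readings) sq += (r - mean) * (r - mean);
    return {mean, std::sqrt(sq / (readings.size() - 1))};
}
```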

I also tested in a pitch-black room instead of my normal well-lit room, as shown in the graph. The results were a little closer to the actual distances beyond 1250 mm. I think this is because the sensor is in short distance mode, and ambient light starts to interfere at longer distances in brighter conditions but much less in the dark, where the sensor performs slightly better. I also tested different colors and textures, and the results were virtually identical (unlike with the proximity sensor), so this sensor is much less sensitive to those properties of objects.

For the timing measurements, I used the micros() function to time individual sensor functions:

Obstacle Avoidance

I first mounted the sensors, Li-Ion battery, and Artemis board firmly to the car using the double-sided tape from my kit, plus a bit of cardboard for the proximity and ToF sensors at the front. I daisy-chained all the sensors, the motor driver, and the Artemis board using Qwiic connectors. I also made a cardboard bumper to give the sensors a smooth mounting surface, angled slightly upwards so they wouldn't accidentally detect the floor.

I tested the vehicle incrementally, starting with a speed of around 80 on both motors. This let me find the approximate distance at which the car should stop and turn, which was about 500 mm. I then gradually increased the linear speed, adjusting the time and power spent braking, coasting, or turning to compensate, until the robot started bumping into objects more often. I used getRangeStatus() to check whether each ToF measurement was valid. I did not end up using the proximity sensor: when I added it to my program, it didn't reduce collisions and, if anything, seemed to make performance worse, since it appears to be less accurate and reliable. My code therefore relies only on the fine-tuned ToF sensor.

My robot drives forward whenever the distance measurement is greater than the threshold. The threshold varies between 500 and 800 mm depending on whether the robot has just turned or has just sensed an obstacle. This strategy performed better because it reduced cases where the robot didn't turn enough and collided straight into an object. When the robot sees an obstacle, it brakes, coasts, and then turns.
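The two-threshold rule can be separated from the motor commands as a small state machine. This is a hypothetical restructuring of the loop() logic. The names are illustrative, and the brake/coast/turn sequence is reduced to a single Avoid action.

```cpp
#include <cassert>

enum class Action { Forward, Avoid };

// Two-threshold rule (hypothetical restructuring of loop()): drive on while the
// reading exceeds the current threshold; after an avoidance maneuver, require a
// longer clear distance (800 mm) before driving on again.
struct AvoidanceState {
    int thresholdMm = 500;

    Action step(int distanceMm) {
        assert(distanceMm >= 0);
        if (distanceMm > thresholdMm) {
            thresholdMm = 500;   // path clear: return to the normal threshold
            return Action::Forward;
        }
        thresholdMm = 800;       // obstacle: be more cautious on the next reading
        return Action::Avoid;
    }
};
```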

Through this process, I ended up with the following code:

```cpp
#include "ComponentObject.h"
#include "RangeSensor.h"
#include "SparkFun_VL53L1X.h"
#include "vl53l1x_class.h"
#include "vl53l1_error_codes.h"
#include "Wire.h"
#include "Arduino.h"
#include "stdint.h"
#include "SCMD.h"
#include "SCMD_config.h" //Contains #defines for common SCMD register names and values
#include "SparkFun_VCNL4040_Arduino_Library.h"

//Optional interrupt and shutdown pins.
#define SHUTDOWN_PIN 2
#define INTERRUPT_PIN 3
#define LEDPIN 13

SFEVL53L1X distanceSensor;
SCMD myMotorDriver;

#define left 0
#define right 1

void setup(void)
{
  Serial.begin(115200);
  Wire.begin();
  pinMode(LEDPIN, OUTPUT);

  //************************************************************************
  //Connect to all sensors and motor driver
  if (distanceSensor.begin() != 0) //Begin returns 0 on a good init
  {
    Serial.println("Sensor failed to begin. Please check wiring. Freezing...");
    while (1);
  }
  // Serial.println("Sensor online!");

  myMotorDriver.settings.commInterface = I2C_MODE;
  myMotorDriver.settings.I2CAddress = 0x5D; //config pattern is "1000" (default) on board for address 0x5D
  myMotorDriver.settings.chipSelectPin = 10;

  //*****initialize the driver get wait for idle*****//
  while ( myMotorDriver.begin() != 0xA9 ) //Wait until a valid ID word is returned
  {
    Serial.println( "ID mismatch, trying again" );
    delay(500);
  }
  Serial.println( "ID matches 0xA9" );

  // Check to make sure the driver is done looking for slaves before beginning
  Serial.print("Waiting for enumeration...");
  while ( myMotorDriver.ready() == false );
  while ( myMotorDriver.busy() ); //Waits until the SCMD is available.
  myMotorDriver.enable();

  //****************************************************************************
  // Distance sensor
  distanceSensor.setDistanceModeShort();
  distanceSensor.setTimingBudgetInMs(30);
  distanceSensor.setIntermeasurementPeriod(5);
}

int threshold = 500; // mm; raised to 800 right after an avoidance maneuver

void loop(void)
{
  distanceSensor.startRanging();
  while (!distanceSensor.checkForDataReady()) {delay(1);}
  int distance = distanceSensor.getDistance() + 21; //Get the result of the measurement from the sensor + offset
  byte rangeStatus = distanceSensor.getRangeStatus();

  if (rangeStatus == 0) { // if no sensor errors occur (sigma, signal, etc)
    if (distance == 0 || distance > threshold) { // onwards (comparison, not assignment)
      myMotorDriver.setDrive(left, 1, 160);
      myMotorDriver.setDrive(right, 1, 148);
      delay(10);
      threshold = 500;
    }
    else {
      digitalWrite( LEDPIN, 1 );
      myMotorDriver.setDrive(left, 0, 250); //brake
      myMotorDriver.setDrive(right, 0, 250);
      delay(100);
      myMotorDriver.setDrive(left, 0, 0); //coast
      myMotorDriver.setDrive(right, 0, 0);
      delay(50);
      myMotorDriver.setDrive(left, 1, 150); //turn
      myMotorDriver.setDrive(right, 0, 150);
      delay(40);
      threshold = 800;
    }
  }
}
```

I was also able to make the robot follow a straight line and stop before hitting the wall. I could do this reliably at around 3.8 ft/s: the green line is 6 ft long, and each run took about 1.57 s. Above that speed, the car could still follow the straight line and stop, but it was not as good at avoiding obstacles in general.

I then created functions for going forwards, turning, going backwards, etc., and tuned the distances and times based on the robot's speed. This way, braking is better timed, and while turning may take a little longer, I think the accuracy is a big improvement over before.

#include

#include

#include "SCMD.h"

#include "SCMD_config.h" //Contains #defines for common SCMD register names and values

#include "Wire.h"

#include

#include

#include

#include

#include

#include

#include "SparkFun_VL53L1X.h" //Click here to get the library: http://librarymanager/All#SparkFun_VL53L1X

//Optional interrupt and shutdown pins.

#define SHUTDOWN_PIN 2

#define INTERRUPT_PIN 3

#define LEDPIN 13

const float _adjustPercent= 1.0630;

const int _pwrLevel = 255;

const int _minObjDistance = 400;

const int _forwardCounterRatio = 6;

const int _turnTime = 1;

const int _coastingTime = 50;

const int _backwardTime = 50;

SFEVL53L1X distanceSensor;

SCMD myMotorDriver; //This creates the main object of one motor driver and connected slaves.

void setup()

{

Wire.begin();

Serial.begin(115200);

Serial.println("VL53L1X Qwiic Test");

if (distanceSensor.begin() != 0) //Begin returns 0 on a good init

{

Serial.println("Sensor failed to begin. Please check wiring. Freezing...");

while (1)

;

}

Serial.println("Sensor online!");

pinMode(LEDPIN, OUTPUT);

Serial.println("Starting sketch.");

//***** Configure the Motor Driver's Settings *****//

// .commInter face can be I2C_MODE or SPI_MODE

myMotorDriver.settings.commInterface = I2C_MODE;

//myMotorDriver.settings.commInterface = SPI_MODE;

// set address if I2C configuration selected with the config jumpers

myMotorDriver.settings.I2CAddress = 0x5D; //config pattern "0101" on board for address 0x5A

// set chip select if SPI selected with the config jumpers

myMotorDriver.settings.chipSelectPin = 10;

//*****initialize the driver get wait for idle*****//

while ( myMotorDriver.begin() != 0xA9 ) //Wait until a valid ID word is returned

{

Serial.println( "ID mismatch, trying again" );

delay(500);

}

Serial.println( "ID matches 0xA9" );

// Check to make sure the driver is done looking for slaves before beginning

Serial.print("Waiting for enumeration...");

while ( myMotorDriver.ready() == false );

Serial.println("Done.");

Serial.println();

//*****Set application settings and enable driver*****//

while ( myMotorDriver.busy() );

myMotorDriver.enable();

Serial.println();

distanceSensor.setDistanceModeShort();

distanceSensor.setTimingBudgetInMs(20);

distanceSensor.setIntermeasurementPeriod(20);

}

void loop()

{

static bool back;

static long fowardCounter;

float speedAdjuster = fowardCounter/_forwardCounterRatio;

if (speedAdjuster > 8 ) speedAdjuster = 8;

if (getDistance()> (_minObjDistance + speedAdjuster * 100) ){

back=false;

motorForward();

fowardCounter++;

}

else {

digitalWrite( LEDPIN, 1 );

if (!back){

motorCoasting(_coastingTime );

motorBackward(_backwardTime + speedAdjuster * 25 );

motorPivotRight(_turnTime);

back=true;

fowardCounter = 0;

}

// motorStop();

}

}

int getDistance(){
  distanceSensor.startRanging(); //Write configuration bytes to initiate measurement
  while (!distanceSensor.checkForDataReady())
  {
    delay(1);
  }
  int distance = distanceSensor.getDistance(); //Get the result of the measurement from the sensor
  distanceSensor.clearInterrupt();
  distanceSensor.stopRanging();
  return distance;
}

void motorForward(){
  // Drive both motors forward; motor 1 is scaled by _adjustPercent and kept within the 0-255 range
  myMotorDriver.setDrive( 0, 1, _pwrLevel);
  myMotorDriver.setDrive( 1, 1, constrain(_pwrLevel*_adjustPercent, 0, 255));
  delay(10);
}

void motorCoasting(int time){
  myMotorDriver.setDrive( 0, 0, 0);
  myMotorDriver.setDrive( 1, 0, 0);
  delay(time);
}

void motorBackward(int time){
  myMotorDriver.setDrive( 0, 0, _pwrLevel);
  myMotorDriver.setDrive( 1, 0, constrain(_pwrLevel*_adjustPercent, 0, 255));
  delay(time);
}

void motorPivotRight(int time){
  // Motor 0 forward, motor 1 reverse, at about 70% power
  myMotorDriver.setDrive( 0, 1, _pwrLevel*0.7);
  myMotorDriver.setDrive( 1, 0, _pwrLevel*_adjustPercent*0.7);
  delay(time);
}

void motorPivotLeft(int time){
  // Motor 0 reverse, motor 1 forward, at about 70% power
  myMotorDriver.setDrive( 0, 0, _pwrLevel*0.7);
  myMotorDriver.setDrive( 1, 1, _pwrLevel*_adjustPercent*0.7);
  delay(time);
}

void motorStop(){
  myMotorDriver.setDrive( 0, 1, 0);
  myMotorDriver.setDrive( 1, 1, 0);
  delay(10);
}

Lab 5b: Obstacle Avoidance on My Virtual Robot

I downloaded and set up the lab5 base code in my VM as I had previously done in Lab 4. I then started the simulator using lab5-manager, changed into the lab5 work folder, and started the Jupyter server in my VM (in the same way as Lab 4). Finally, I opened the lab5.ipynb notebook to start the lab.

To do obstacle avoidance for the virtual robot, I used data from the robot's range finder. Inside an infinite while loop, I first had the robot turn whenever it saw a wall. If the robot measured a distance less than or equal to 0.5, I made it stop and turn in place at an angular speed of 1 for 0.25 seconds; otherwise, I set the linear velocity to 1 and kept the robot moving forward for 0.1 seconds.

After trying this out, I saw that I could fine-tune the threshold to let the robot get closer to the wall without crashing. This took some trial and error, because the robot was not always moving perpendicular to a wall, and since the virtual robot's laser range finder seemed to point mainly straight ahead, making the threshold too small meant the robot would sometimes miss the wall and crash. I had the robot turn in 90 degree increments so it was less likely to approach a wall at a random angle and collide with it, especially when driving almost parallel to a wall. I also settled on alternating the turn angle so the robot would take a more random path (it kept going in a rectangle if I always used the same angle).

I experimented with how long the robot drove forward or turned using time.sleep(), which simply pauses the script so that the most recently commanded velocity stays in effect for the given number of seconds. I made the robot turn about 90 degrees using the set_vel() function and also experimented with the virtual robot's linear speed to minimize collisions. You can make the robot go faster, but then you have to make the distance threshold in the while loop a little larger to compensate. I settled on a linear speed of 0.7 and a distance threshold of 0.3, and also made the robot back up a little before it turns.
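The tuned policy can be summarized as a small decision function. This is a minimal sketch, not the notebook code from the lab: `next_commands` is a hypothetical helper, the back-up and turn durations are illustrative, the alternation is interpreted here as switching between left and right turns, and the `robot.get_laser_data()` / `robot.set_vel(linear, angular)` calls in the commented loop are assumed from the lab's notebook interface.

```python
def next_commands(distance, turn_left, threshold=0.3, speed=0.7,
                  backup_time=0.2, turn_time=0.25, turn_rate=1.0):
    """Decide the next motion commands from one range reading.

    Returns (commands, next_turn_left), where commands is a list of
    (linear_velocity, angular_velocity, duration_s) tuples.
    """
    if distance > threshold:
        # Path is clear: drive forward for a short interval.
        return [(speed, 0.0, 0.1)], turn_left

    angular = turn_rate if turn_left else -turn_rate
    commands = [
        (0.0, 0.0, 0.1),             # stop
        (-speed, 0.0, backup_time),  # back up a little
        (0.0, angular, turn_time),   # pivot in place; tune turn_time for ~90 degrees
    ]
    # Alternate the turn direction so the path does not settle into a loop.
    return commands, not turn_left


# Example control loop (robot API assumed from the lab notebook):
# import time
# turn_left = True
# while True:
#     cmds, turn_left = next_commands(robot.get_laser_data(), turn_left)
#     for linear, angular, duration in cmds:
#         robot.set_vel(linear, angular)
#         time.sleep(duration)
```

Keeping the decision separate from the sleep-based loop makes it easy to try different thresholds and speeds without touching the simulator code.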

Over time, however, the robot deviates from its 90 degree headings and becomes increasingly offset from the maze's grid, making it more likely to run into obstacles since it starts approaching walls at more random angles.

IMU

The purpose of this lab was to set up the IMU, mount it on the robot, and attempt PID control of the rotational speed.

Parts Required:

Lab 6a: IMU, PID, and Odometry

Prelab:

I installed SerialPlot to help visualize the data and read through the SparkFun IMU documentation and datasheet. I also installed the PID Arduino library.

Setting up the IMU

I confirmed that the SparkFun 9DOF IMU Breakout-ICM 20948 Arduino library was installed and connected the IMU to the Artemis board using a Qwiic connector. After scanning the I2C bus, I found the sensor at address 0x69, which matches the datasheet. I ran the "\Examples\SparkFun 9DOF IMU Breakout-ICM 20948 Arduino library\Arduino\Example1_Basics" script and observed that the accelerometer reported data for the x, y, and z axes. At rest, the accelerometer z-axis measurement was always around 960 mg (milli-g, roughly 1 g) due to gravity, as shown below.

When I accelerated the IMU along a direction marked on the silkscreen, the accelerometer reading for that direction increased. Likewise, when I rotated it around the x, y, or z axis, there was a corresponding spike in the gyroscope reading for that axis, since the gyroscope measures the rate of angular change, before it settled back to 0.

Accelerometer

I first taped the accelerometer to a box so I could easily rotate/move it accurately:

I found the pitch and roll as shown below:

float pitch_a = atan2(myICM.accX(), myICM.accZ())*180/M_PI;
float roll_a = atan2(myICM.accY(), myICM.accZ())*180/M_PI;

Using the average of 200 measurements each, I found the output at {-90, 0, 90} degrees of pitch and roll:

I also recorded the output on the Plotter for those angles in terms of pitch and then in terms of roll.

The accelerometer output is fairly noisy, but it is quite accurate once the output is filtered or the IMU is at rest. The pitch and roll angles computed from the accelerometer data were very close to the actual values: over the 0 to 90 and -90 to 0 degree ranges, the measured angle tracked the true angle with a scale factor slightly above 1 (around 1.025 for both pitch and roll). I noticed some instability when the roll is exactly -90 or 90 degrees (the atan2 calculation jumps around due to noise).
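As a cross-check away from the hardware, the same atan2 formulas can be evaluated in Python on recorded samples. A minimal sketch; the function names are hypothetical, and the inputs are raw accelerometer values in any consistent unit (the units cancel inside atan2).

```python
import math


def accel_pitch_roll(ax, ay, az):
    """Pitch and roll in degrees from one accelerometer sample.

    Same formulas as the Arduino snippet: pitch from (x, z), roll from (y, z).
    """
    pitch = math.degrees(math.atan2(ax, az))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def averaged_pitch_roll(samples):
    """Average pitch and roll over a list of (ax, ay, az) samples."""
    angles = [accel_pitch_roll(*s) for s in samples]
    n = len(angles)
    return (sum(p for p, _ in angles) / n, sum(r for _, r in angles) / n)
```

Averaging many samples (200 in the measurements above) reduces the effect of noise on each reported angle.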

I wrote Python code based on the tutorial on the lab page to plot the frequency response using a Fourier transform. I was a bit surprised to find that the resulting frequency-domain data, shown below, is incredibly noisy. I had difficulty picking a cutoff frequency for my low-pass filter from this data and estimated that it was around 200 Hz. Knowing that the low-pass filter's alpha is T/(T + RC), where T is the sampling period, and with RC around 33.3 and a sampling rate of around 200 Hz (so T ≈ 5 ms), I then used trial and error to settle on an alpha value of around 0.2. I noticed that the lower the value, the smoother the signal looked, but the measurement lagged a bit more.
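For reference, the spectrum itself comes from a discrete Fourier transform of the logged samples. The sketch below is a stand-in for the tutorial code, not a copy of it: it uses a plain O(n²) DFT so that it needs only the standard library (`numpy.fft.rfft` computes the same spectrum much faster), and `dominant_frequency` is a hypothetical helper name.

```python
import cmath
import math


def dominant_frequency(samples, sample_rate_hz):
    """Return the frequency (Hz) of the largest non-DC spectral component."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [x - mean for x in samples]  # remove DC so bin 0 does not dominate
    best_bin, best_mag = 1, -1.0
    for k in range(1, n // 2 + 1):  # positive-frequency bins only
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(centered))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    # Bin k corresponds to k * fs / n Hz.
    return best_bin * sample_rate_hz / n
```

On real IMU data the spectrum is spread out rather than a clean peak, which is why choosing a cutoff from it takes judgment.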

My code then ended up being as follows:

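float alpha = 0.2;
// First-order low-pass filter: blend the new reading with the previous *filtered* value
pitch_a_LPF = alpha * pitch_a + (1 - alpha) * old_pitch_a_LPF;
roll_a_LPF = alpha * roll_a + (1 - alpha) * old_roll_a_LPF;
old_pitch_a_LPF = pitch_a_LPF;  // keep the filtered values for the next iteration
old_roll_a_LPF = roll_a_LPF;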
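The filter above is a first-order IIR low-pass filter, and alpha follows from the sampling period and the cutoff frequency. A sketch under stated assumptions: the helper names are hypothetical, RC is taken as 1/(2πf_c), and the filter feeds back its previous filtered output.

```python
import math


def lpf_alpha(sample_period_s, cutoff_hz):
    """alpha = T / (T + RC), with RC = 1 / (2*pi*f_c)."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    return sample_period_s / (sample_period_s + rc)


def low_pass(samples, alpha):
    """First-order IIR low-pass: each output blends the new sample with
    the previous filtered output."""
    out = []
    prev = samples[0]  # seed with the first sample to avoid a start-up transient
    for x in samples:
        prev = alpha * x + (1.0 - alpha) * prev
        out.append(prev)
    return out
```

With T = 5 ms (a 200 Hz sampling rate) and an illustrative 5 Hz cutoff, `lpf_alpha(0.005, 5.0)` is about 0.14, in the same range as the hand-tuned 0.2; a smaller alpha smooths more but adds lag.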

Here is the output when I just filter the pitch output with the low pass filter (and not the roll output):

Here is the output when I just filter the roll output with the low pass filter (and not the pitch output):

Gyroscope

I computed pitch, roll, and yaw angles from the gyroscope:

float dt = (millis() - last_time) / 1000.;
last_time = millis();
pitch_g = pitch_g - myICM.gyrY() * dt;
roll_g = roll_g + myICM.gyrX() * dt;

The gyroscope pitch/roll calculation integrates the angular velocity over time to estimate the angle, which tends to drift, as shown below. The roll angles from the accelerometer and gyroscope are about the same at the beginning but drift apart over time. The drift appears worse at higher sampling rates than at lower ones, becoming evident sooner. Apart from the drift, the data looks quite accurate and responds faster than the accelerometer estimate, which is slowed down by its low-pass filter.
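Why the gyroscope estimate drifts can be seen by integrating a small constant bias: the angle error grows linearly with time even when the IMU is still. A minimal sketch; `integrate_gyro` is a hypothetical name, and the 0.5 dps bias and 100 Hz rate in the example are illustrative, not measured.

```python
def integrate_gyro(rates_dps, dt_s, angle0_deg=0.0):
    """Integrate angular rate (deg/s) into angle (deg) with forward Euler,
    the same update as pitch_g/roll_g above."""
    angle = angle0_deg
    angles = []
    for rate in rates_dps:
        angle += rate * dt_s
        angles.append(angle)
    return angles
```

For example, `integrate_gyro([0.5] * 1000, 0.01)` (a 0.5 dps bias for 10 s at 100 Hz) ends at about 5 degrees of error for a stationary IMU.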

I then fused the gyroscope estimate with the low-pass-filtered accelerometer estimate using a complementary filter:

alpha = 0.02;
pitch = (1 - alpha) * (pitch - myICM.gyrY() * dt) + alpha * pitch_a_LPF;
roll = (1 - alpha) * (roll + myICM.gyrX() * dt) + alpha * roll_a_LPF;

See the plot below (green: complementary filter, red: gyroscope, blue: accelerometer). The filter uses the gyroscope for short-term changes and complements it with the accelerometer's long-term reference to prevent drift.
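The complementary filter can be simulated to see how the small accelerometer weight bounds the gyroscope drift. A minimal sketch with the same update form and alpha = 0.02 as above; the function name and the 1 dps bias used in the example are illustrative assumptions.

```python
def complementary_filter(gyro_rates_dps, accel_angles_deg, dt_s,
                         alpha=0.02, angle0_deg=0.0):
    """Fuse gyro rates with accelerometer angles:
    angle = (1 - alpha) * (angle + rate * dt) + alpha * accel_angle."""
    angle = angle0_deg
    estimates = []
    for rate, accel_angle in zip(gyro_rates_dps, accel_angles_deg):
        angle = (1.0 - alpha) * (angle + rate * dt_s) + alpha * accel_angle
        estimates.append(angle)
    return estimates
```

With a 1 dps bias, alpha = 0.02 and dt = 0.01 s, the estimate settles near (1 - alpha) * bias * dt / alpha = 0.49 degrees, whereas pure integration would have drifted to 20 degrees after the same 20 s.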

Magnetometer

I implemented finding yaw with tilt compensation:

float xm = myICM.magY()*cos(roll*M_PI/180) - myICM.magZ()*sin(roll*M_PI/180);
float ym = myICM.magX()*cos(pitch*M_PI/180) + myICM.magY()*sin(roll*M_PI/180)*sin(pitch*M_PI/180) + myICM.magZ()*cos(roll*M_PI/180)*sin(pitch*M_PI/180);
float yaw = -atan2(ym, xm)*180/M_PI;

Here is the position of the IMU with a phone compass as a reference.

The compensated values (red) are less sensitive to small changes in pitch than the uncompensated values (blue), as shown below.
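A textbook form of the tilt-compensated heading is sketched below for comparison. Sign and axis conventions differ between boards and mounting orientations, and the Arduino code above arranges the terms differently, so this is not a drop-in replacement; the body-frame convention in the docstring is an assumption, and `tilt_compensated_heading` is a hypothetical name.

```python
import math


def tilt_compensated_heading(mx, my, mz, pitch_deg, roll_deg):
    """Heading in degrees from magnetometer readings plus pitch and roll.

    Assumes an x-forward, y-right, z-down body frame in which a roll of phi
    maps a level field (bx, by, bz) to my = by*cos(phi) + bz*sin(phi).
    Other boards may need sign changes.
    """
    p = math.radians(pitch_deg)
    r = math.radians(roll_deg)
    # Project the measured field back onto the horizontal plane.
    xh = mx * math.cos(p) + my * math.sin(r) * math.sin(p) + mz * math.cos(r) * math.sin(p)
    yh = my * math.cos(r) - mz * math.sin(r)
    return math.degrees(math.atan2(-yh, xh))
```

The point of the projection is that rolling or pitching the board changes the raw x/y readings but, once compensated, leaves the computed heading unchanged.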

PID Control

1. Ramp up and Down Function

I first changed the IMU settings to set the gyroscope's full-scale range to 1000 dps instead of the default 250 dps.

ICM_20948_fss_t myFSS; // This uses a "Full Scale Settings" structure that can contain values for all configurable sensors
myFSS.g = dps1000;     // (ICM_20948_GYRO_CONFIG_1_FS_SEL_e)
myICM.setFullScale( (ICM_20948_Internal_Gyr), myFSS );
if( myICM.status != ICM_20948_Stat_Ok ){
  Serial.print(F("setFullScale returned: "));
  Serial.println(myICM.statusString());
}

I then set the motors to run in opposite directions, slowly increasing and then decreasing the motor power over about 25 seconds, and graphed the motor power against the gyroscope reading using SerialPlot.
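The ramp can be described as a list of power levels that the loop steps through. A minimal sketch; `ramp_profile` is a hypothetical helper, and the total duration depends on how long each loop iteration takes (delays plus the blocking TOF read), which it does not model.

```python
def ramp_profile(peak=255, step=1):
    """Motor power levels that ramp 0 -> peak -> 0 in equal steps."""
    up = list(range(0, peak + 1, step))
    down = list(range(peak - step, -1, -step))
    return up + down
```

Plotting the gyroscope reading against each level in this sequence gives the kind of up-and-down characteristic shown below, including the dead band at low power.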

Here are the measurements and characteristics of the vehicle:

The red data is the motor output and the purple is for the gyroscope output:

2. Lowest possible speed at which you can turn:

I found the lowest speed to be around a motor power of 170, with a gyroscope average of around 280 degrees/sec.

Below 180, I got this graph for a motor power of 170 (with a single wheel turning and a gyroZ average of around 110):

Additionally, I tested the limit where both wheels stopped turning. This seemed to be around a motor power of 160, as shown below:

3. Accuracy of TOF ranging

We can achieve a stable rotation speed of 170 dps for the vehicle. The shortest sampling period for the TOF sensor in short-range mode is 20 ms, so the vehicle rotates about 170 × 0.020 = 3.4 degrees per TOF measurement. At 0.5 m perpendicular to a wall, the reading changes from 50 cm to 50 cm/cos(3.4°) ≈ 50.1 cm in one TOF measurement. Starting at a 45 degree angle to the wall (still 0.5 m away), the reading changes from 50 cm/sin(45°) ≈ 70.7 cm to 50 cm/sin(48.4°) ≈ 66.9 cm, a difference of about 3.8 cm. Given the two TOF parameters, signal and sigma, we might get a reliable rotational scan, but ideally we would want the robot to rotate a factor of two or so slower for a more reliable scan.
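The geometry above can be checked numerically. A minimal sketch with hypothetical helper names: the beam is modeled as a straight ray to a flat wall, and the rotation per reading is simply rotation speed times sampling period.

```python
import math


def rotation_per_reading(rate_dps, period_s):
    """Degrees the robot turns during one TOF measurement."""
    return rate_dps * period_s


def perpendicular_change(dist_cm, rot_deg):
    """Reading after rotating rot_deg away from facing a wall head-on."""
    return dist_cm / math.cos(math.radians(rot_deg))


def oblique_reading(perp_dist_cm, angle_to_wall_deg):
    """Beam length to a wall perp_dist_cm away, hit at angle_to_wall_deg."""
    return perp_dist_cm / math.sin(math.radians(angle_to_wall_deg))
```

The same helpers reproduce the one-motor numbers later in this section: 80 dps at 40 ms gives 3.2 degrees and 200 cm → about 200.3 cm, and 80 dps at 100 ms gives 8 degrees and 400 cm → about 403.9 cm.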

4. PID Control

I originally decided to go with PID control since I knew that P control alone would leave a steady-state error, because a proportional controller needs a nonzero error to produce any output. However, after tuning with PID, I found that the derivative term did not help much in controlling the robot, since the error did not change quickly enough for it to matter. In fact, adding the derivative gain led to oscillations in the output and made the controller much harder to tune because of sensor noise (a derivative amplifies high-frequency signals more than low-frequency ones). When I added Kd, I also saw motor clamping that was hard to eliminate. Because of this, I went with PI control, since the integral term eliminates steady-state error and the system seemed to settle into a more stable state.

I should also note that a crappy USB-C cable is actually better suited for this lab, since its thinner, softer cord drags less when the vehicle rotates with the cable connected.

I first wrote my functions for using PID control, so I could then focus the rest of my time on tuning the values.

void runPIDLoop(int setpoint, float KP, float KI, float KD){
  static long startTime = millis();
  static float prevFeedback = 0;
  static float prevPIDOutput = 0;
  static bool IntegralClamp = false;

  if ( millis() - startTime < 10000 ){
    float pidOutput = computePID(setpoint, KP, KI, KD, prevFeedback, IntegralClamp);
    float motorStep = prevPIDOutput + pidOutput;

    // Limit motor power to [80, 255]; flag saturation so computePID skips the integral term
    if (motorStep > 255) {
      motorStep = 255;
      IntegralClamp = true;
    } else if (motorStep < 80) {
      motorStep = 80;
      IntegralClamp = true;
    } else {
      IntegralClamp = false;
    }

    turnClockwise(motorStep);
    prevFeedback = printData(motorStep);
    prevPIDOutput = pidOutput;
  } else {
    digitalWrite( LED_BUILTIN, LOW );
    motorStop();
  }
}

float computePID(int setPoint, float KP, float KI, float KD, float input, bool IntegralClamp){
  static long prevTime = millis();
  static float cumError = 0;
  static float rateError = 0;
  static float lastError = 0;

  long currentTime = millis();
  long elapsedTime = currentTime - prevTime;  // ms since the last call
  float error = setPoint - input;
  cumError += error * elapsedTime;

  // Guard against dividing by zero on the first call (or two calls in the same millisecond)
  rateError = (elapsedTime > 0) ? (error - lastError) / elapsedTime : 0;

  float out = KP * error + KD * rateError;
  if (!IntegralClamp){
    out += KI * cumError;  // skip the integral term while the motor output is clamped
  }

  lastError = error;
  prevTime = currentTime;
  return out;
}

To tune the PI controller, I leaned toward Heuristic Procedure 1 from the lecture notes. I set Kp to a small value, with Ki at 0 at first. I then increased Kp until I saw overshoot or oscillations, and then decreased it. This step took a decent amount of time, but once the oscillations were gone I could move on to the integral coefficient. I slowly increased Ki from 0 until I saw overshoot, then kept adjusting and rerunning the robot until the oscillations were eliminated.

In particular, I first started with a gyroZ target of 200 dps and a Kp of 5, which was clearly too high:

I then scaled Kp down to 1, which left a steady-state error of around 50 dps.

I then lowered Kp to 0.5 and set Ki to 0.1, which led to a very unstable system.

Knowing Ki was too high, I spent a lot of time tuning the parameters and ended up with a gyroZ setpoint of 170 dps, Kp = 0.935, and Ki = 0.00255. As shown, this system looks stable.

I also have a motor power range limiter in runPIDLoop(), since the motor is a nonlinear actuator with a limited power range, and I implemented integral clamping to avoid integral wind-up. As a result, the motor power is clamped to a minimum of 80 and a maximum of 255.
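The clamping idea can be sketched in Python. This is a PI controller with output limits and conditional integration (the integral is frozen while the output is saturated), one common anti-windup scheme; it differs slightly from the Arduino code above, which keeps accumulating `cumError` but leaves it out of the output while clamped. The toy first-order plant and the gains in the example are illustrative assumptions, not a model of the real robot.

```python
class ClampedPI:
    """PI controller with output limited to [out_min, out_max] and
    conditional integration as anti-windup."""

    def __init__(self, kp, ki, out_min=80.0, out_max=255.0):
        self.kp = kp
        self.ki = ki
        self.out_min = out_min
        self.out_max = out_max
        self.integral = 0.0
        self.saturated = False

    def update(self, setpoint, measurement, dt_s):
        error = setpoint - measurement
        if not self.saturated:
            self.integral += error * dt_s  # only accumulate when not clamped
        out = self.kp * error + self.ki * self.integral
        if out > self.out_max:
            out, self.saturated = self.out_max, True
        elif out < self.out_min:
            out, self.saturated = self.out_min, True
        else:
            self.saturated = False
        return out


def simulate(controller, setpoint, steps=1000, dt_s=0.01, tau_s=0.2):
    """Toy plant: speed relaxes toward 1.5 * power - 100 (dps).
    Illustrative only, not fitted to the robot."""
    speed, powers = 0.0, []
    for _ in range(steps):
        power = controller.update(setpoint, speed, dt_s)
        powers.append(power)
        speed += dt_s / tau_s * (1.5 * power - 100.0 - speed)
    return speed, powers
```

With an unreachable setpoint the output sits at 255 while the integral stays frozen, so the controller recovers immediately once the setpoint becomes reachable instead of first unwinding a huge integral.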

As shown, the robot can clearly reach lower speeds under closed-loop control than under open-loop control: I went from an open-loop speed of 280 dps to around 170 dps, which is significantly lower. This is about slow enough to produce a rough rotational scan, though the scan might not be the most reliable, since 170 dps is still around half a full rotation per second.

5. One Motor Only

I then used PID control on one motor only to see how slowly I could make the robot spin. Much lower speeds are possible this way, since only one motor is driving instead of two motors driving in opposite directions (which adds up to a faster spin). The robot essentially rotates around one of its wheels rather than around its center.

I found that 80 dps was the lowest gyroscope setpoint I could use. Setting the right motor to 0 let the robot drift, so instead I set it to a low value of 50, which assisted the other motor just enough for the robot to spin around a single wheel.

In terms of accuracy, at a speed of 80 dps and a ToF sampling period of 40 ms, the vehicle rotates 3.2 degrees per TOF measurement. If you were mapping a 2 m × 2 m box, the reading would change from 200 cm to 200 cm/cos(3.2°) ≈ 200.3 cm, which is very accurate. To map a 4 m × 4 m box, you would want to switch the TOF sensor to long-distance mode instead of short mode, with a sampling period of around 100 ms. That gives 8 degrees of rotation per measurement, so the distance changes from 400 cm to about 404 cm, which is still fairly accurate. Noise and inaccuracies in the robot's turning may make the real result less accurate than this, but plotting the TOF sensor readings should still give a reasonably accurate map of a room.

I didn't have enough time to plot my TOF sensor readings in a polar plot or implement PID control on my robot's forward velocity, since this lab took quite a lot of time to complete, but I guess I'll get to work on this in future labs.

Lab 6b: Odometry - Virtual Robot

The purpose of this lab is to use the plotter tool to visualize the difference between the odometry estimate (using motion data to estimate the change in position) and the ground truth pose (the actual position) of the virtual robot.

I downloaded the lab6 base code and set everything up as before. I started the Plotter by starting the lab6-manager and pressing "b" and "s", and also started the Simulator and the keyboard teleop tool. I then started the Jupyter server in my work directory.

I plotted the odometry and ground truth data points using the Plotter tool while controlling the robot through the keyboard teleop tool. Sending data every 0.1 seconds seemed reasonable, and I saw that the odometry and ground truth data points drifted further and further apart as time went on due to error accumulation.

In particular, if I had the virtual robot remain stationary for a while and then started moving it around, the ground truth plot would start from the origin, while the odometry estimate would already have accumulated drift during that time, so it started at a different point and the whole plot was offset by this error. If I let the program run for a long time, the error became even more pronounced: at one point, the two current points were half the graph's length apart, which is clearly very wrong. While the odometry trajectory resembles the ground truth and includes the same general turns and distances, the error and noise in the readings cause inaccuracies that grow the longer the program runs. These inaccuracies seem worse for turning than for linear distance traveled.
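The accumulation of odometry error, and why heading errors hurt the most, can be illustrated with dead reckoning. A minimal sketch: a constant per-step heading error stands in for odometry noise, and the function name and numbers are illustrative assumptions.

```python
import math


def dead_reckon(steps, step_len_m, heading_bias_deg_per_step=0.0):
    """Integrate straight-line motion while a small heading error accumulates.
    Returns the list of estimated (x, y) positions."""
    x = y = heading = 0.0
    path = []
    for _ in range(steps):
        heading += math.radians(heading_bias_deg_per_step)
        x += step_len_m * math.cos(heading)
        y += step_len_m * math.sin(heading)
        path.append((x, y))
    return path
```

Because a heading error rotates every later step, the position error grows faster than linearly with the distance traveled, which matches turns being the worst part of the odometry plot.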

In this video, I made the robot go in a loop. In the ground truth graph, the loop appears as it should. In the odometry graph, however, the loop is not a perfect circle and becomes increasingly distorted. The noise also seems to vary with time and speed: it became even worse when I ran the robot at higher speeds, since the distance error appeared to accumulate faster.

Odometry

Lab 7a: Grid Localization using Bayes Filter

Objective:

This lab was a precursor to the next lab, where we will implement grid localization using a Bayes filter.

I downloaded the lab7 distribution code and first ran the pre-planned trajectory with the Trajectory class, seeing that the odometry data became very offset as time went on. The robot moved fairly slowly but followed a consistent trajectory when I ran the program multiple times. Even with a repeatable trajectory, we need some form of localization to know where the robot is once the odometry drifts, which is where the Bayes filter comes in.

I wrote some pseudocode/Python code for the functions needed in the Bayes Filter Algorithm.

import math


def normalize_angle(angle):
    """Wrap an angle in degrees into [-180, 180)."""
    return (angle + 180) % 360 - 180


# In world coordinates
def compute_control(cur_pose, prev_pose):
    """ Given the current and previous odometry poses, this function extracts
    the control information based on the odometry motion model.

    Args:
        cur_pose ([Pose]): Current Pose
        prev_pose ([Pose]): Previous Pose

    Returns:
        [delta_rot_1]: Rotation 1 (degrees)
        [delta_trans]: Translation (meters)
        [delta_rot_2]: Rotation 2 (degrees)
    """
    x_delta = cur_pose[0] - prev_pose[0]  # x of current pose - x of previous pose
    y_delta = cur_pose[1] - prev_pose[1]  # y of current pose - y of previous pose

    # Initial rotation: atan2 returns radians, so convert before subtracting the heading in degrees
    delta_rot_1 = normalize_angle(math.degrees(math.atan2(y_delta, x_delta)) - prev_pose[2])

    delta_trans = math.hypot(x_delta, y_delta)  # translation

    delta_rot_2 = normalize_angle(cur_pose[2] - prev_pose[2] - delta_rot_1)  # final rotation

    return delta_rot_1, delta_trans, delta_rot_2

# In world coordinates
def odom_motion_model(cur_pose, prev_pose, u):
    """ Odometry Motion Model

    Args:
        cur_pose ([Pose]): Current Pose
        prev_pose ([Pose]): Previous Pose
        (rot1, trans, rot2) (float, float, float): A tuple with control data in the format
            (rot1, trans, rot2) with units (degrees, meters, degrees)

    Returns:
        prob [float]: Probability p(x'|x, u)
    """
    (rot1, trans, rot2) = u

    # Control that would have been needed to move from prev_pose to cur_pose
    (pose_rot1, pose_trans, pose_rot2) = compute_control(cur_pose, prev_pose)

    # Assumes loc.gaussian(x, mu, sigma) takes a standard deviation, not a variance
    p1 = loc.gaussian(pose_rot1, rot1, loc.odom_rot_sigma)      # rotation 1
    p2 = loc.gaussian(pose_trans, trans, loc.odom_trans_sigma)  # translation
    p3 = loc.gaussian(pose_rot2, rot2, loc.odom_rot_sigma)      # rotation 2

    prob = p1 * p2 * p3
    return prob

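def prediction_step(cur_odom, prev_odom, xt, u):
    """ Prediction step of the Bayes Filter.
    Update the probabilities in loc.bel_bar based on loc.bel from the previous time step and the odometry motion model.

    Args:
        cur_odom ([Pose]): Current Pose
        prev_odom ([Pose]): Previous Pose
        xt: grid indices (cx, cy, ca) of the state being updated
        u: control (rot1, trans, rot2), e.g. compute_control(cur_odom, prev_odom)
    """
    # Hypothetical index-to-pose mapping: cells assumed to start at (-2 m, -2 m, -180 deg)
    # with 0.2 m, 0.2 m and 20 deg steps, matching the ranges in the original loops.
    def cell_to_pose(cx, cy, ca):
        return (-2 + 0.2 * cx, -2 + 0.2 * cy, -180 + 20 * ca)

    (cx, cy, ca) = xt  # grid indices of the current state
    cur_pose = cell_to_pose(cx, cy, ca)
    loc.bel_bar[cx][cy][ca] = 0.0  # sum = 0 at first

    # Sum over all possible previous states (iterate over grid indices, not metric values)
    for px in range(len(loc.bel)):
        for py in range(len(loc.bel[0])):
            for pa in range(len(loc.bel[0][0])):
                prev_pose = cell_to_pose(px, py, pa)
                loc.bel_bar[cx][cy][ca] += odom_motion_model(cur_pose, prev_pose, u) * loc.bel[px][py][pa]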

def sensor_model(obs, xt):
    """ This is the equivalent of p(z|x).

    Args:
        obs ([ndarray]): A 1D array consisting of the measurements made in rotation loop
        xt: state (x, y, a) whose expected view is compared against obs

    Returns:
        [ndarray]: Returns a 1D array of size 18 (=loc.OBS_PER_CELL) with the likelihood of each individual measurements
    """
    views = get_views(xt[0], xt[1], xt[2])  # expected (true) view from state xt
    prob_array = [0.0] * loc.OBS_PER_CELL
    for k in range(loc.OBS_PER_CELL):
        # Assumes loc.gaussian(x, mu, sigma) takes a standard deviation
        prob_array[k] = loc.gaussian(obs[k], views[k], loc.sensor_sigma)
    return prob_array

import math


def update_step(z, xt):
    """ Update step of the Bayes Filter.
    Update the probabilities in loc.bel based on loc.bel_bar and the sensor model.
    """
    # update for state xt with measurement z
    (x, y, a) = xt

    # p(z|x): product of the per-measurement likelihoods, assuming independent readings
    likelihood = math.prod(sensor_model(z, xt))
    loc.bel[x][y][a] = likelihood * loc.bel_bar[x][y][a]

    # After this runs for every state xt, divide loc.bel by its sum
    # (the normalization constant) so the belief sums to 1.
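The predict, update, and normalize cycle can be exercised end to end on a much smaller problem. A toy sketch, not the lab's 3D (x, y, θ) grid or its `loc` object: a cyclic 1D grid, a made-up motion model that lands on the commanded cell with probability 0.8 and one cell off with probability 0.1 each way, and a hand-written likelihood. The explicit normalization is the norm_constant step in the pseudocode.

```python
def normalize(belief):
    """Scale a belief so it sums to 1."""
    total = sum(belief)
    return [b / total for b in belief]


def predict(belief, move, p_exact=0.8, p_off=0.1):
    """Prediction step on a cyclic 1D grid: shift by `move` cells, with some
    probability of under- or overshooting by one cell."""
    n = len(belief)
    bel_bar = [0.0] * n
    for i, b in enumerate(belief):
        bel_bar[(i + move) % n] += p_exact * b
        bel_bar[(i + move - 1) % n] += p_off * b
        bel_bar[(i + move + 1) % n] += p_off * b
    return bel_bar


def update(bel_bar, likelihood):
    """Update step: multiply by p(z|x) for each cell, then renormalize."""
    return normalize([b * l for b, l in zip(bel_bar, likelihood)])
```

Starting certain at cell 0 and moving 3 cells spreads the belief over cells 2 to 4; a measurement that favors cell 3 then sharpens it back to a single peak.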

Lab 7b: Mapping

I first prepared an area to map near my front door, marking the boundaries and obstacles with tape so I could recreate the setup whenever I needed to. From this area, I could stand on my stairs and get a good top-down view of the map. To make it interesting, I added a couple of boxes as an obstacle in the middle, included a curve in the map where the stairs begin, and made the course asymmetric.

I then modified my PID code from the last lab to collect data while the robot rotates in place at three points in the map (a single location leaves a couple of blind spots). There was a slight offset when I tried rotating twice, so I tuned my PID controller a bit more, adding the derivative term back in. After that, the controller seemed more accurate.

Additionally, another thing to note is that I switched my turning from clockwise to counterclockwise (because I had to graph this in polar coordinate form). I also had to switch the range for the TOF sensor to be in long-distance mode as opposed to short-distance mode since it's a bigger space and we're going slower.

My code is below:

/*****************************************************************************/

#include
#include
#include "SCMD.h"
#include "SCMD_config.h" //Contains #defines for common SCMD register names and values
#include "Wire.h"
#include
#include
#include
#include
#include
#include
#include "SparkFun_VL53L1X.h" //Click here to get the library: http://librarymanager/All#SparkFun_VL53L1X
#include "ICM_20948.h"

#define WIRE_PORT Wire // Your desired Wire port. Used when "USE_SPI" is not defined
#define AD0_VAL 1      // Last bit of the ICM-20948 I2C address (1 -> 0x69)

ICM_20948_I2C myICM;

//Optional interrupt and shutdown pins.
#define SHUTDOWN_PIN 2
#define INTERRUPT_PIN 3
#define LEDPIN 13

const float _adjustPercent = 1.0630;
const int _pwrLevel = 255;
const int _minObjDistance = 400;
const int _forwardCounterRatio = 6;
const int _turnTime = 1;
const int _coastingTime = 50;
const int _backwardTime = 50;

SFEVL53L1X distanceSensor;
//Uncomment the following line to use the optional shutdown and interrupt pins.
//SFEVL53L1X distanceSensor(Wire, SHUTDOWN_PIN, INTERRUPT_PIN);

SCMD myMotorDriver; //This creates the main object of one motor driver and connected slaves.

float myGyroZ = 0;
float myYaw = 0;
int rotateCount = 0;
float startingYaw_m = 0;

void setup()
{
  Wire.begin();
  Serial.begin(115200);
  Serial.println("VL53L1X Qwiic Test");

  if (distanceSensor.begin() != 0) //Begin returns 0 on a good init
  {
    Serial.println("Sensor failed to begin. Please check wiring. Freezing...");
    while (1)
      ;
  }
  Serial.println("Sensor online!");

  pinMode(LED_BUILTIN, OUTPUT);
  Serial.println("Starting sketch.");

  //***** Configure the Motor Driver's Settings *****//
  // .commInterface can be I2C_MODE or SPI_MODE
  myMotorDriver.settings.commInterface = I2C_MODE;
  //myMotorDriver.settings.commInterface = SPI_MODE;

  // set address if I2C configuration selected with the config jumpers
  myMotorDriver.settings.I2CAddress = 0x5D; // must match the address selected by the config jumpers

  // set chip select if SPI selected with the config jumpers
  myMotorDriver.settings.chipSelectPin = 10;

  //***** initialize the driver and wait for idle *****//
  while ( myMotorDriver.begin() != 0xA9 ) //Wait until a valid ID word is returned
  {
    Serial.println( "ID mismatch, trying again" );
    delay(500);
  }
  Serial.println( "ID matches 0xA9" );

  // Check to make sure the driver is done looking for slaves before beginning
  Serial.print("Waiting for enumeration...");
  while ( myMotorDriver.ready() == false );
  Serial.println("Done.");
  Serial.println();

  //***** Set application settings and enable driver *****//
  while ( myMotorDriver.busy() );
  myMotorDriver.enable();
  Serial.println();

  distanceSensor.setDistanceModeLong();
  distanceSensor.setTimingBudgetInMs(20);
  distanceSensor.setIntermeasurementPeriod(20);

  WIRE_PORT.begin();
  WIRE_PORT.setClock(400000);

  bool initialized = false;
  while( !initialized ){
    myICM.begin( WIRE_PORT, AD0_VAL );
    Serial.print( F("Initialization of the sensor returned: ") );
    Serial.println( myICM.statusString() );
    if( myICM.status != ICM_20948_Stat_Ok ){
      Serial.println( "Trying again..." );
      delay(500);
    }else{
      initialized = true;
    }
  }

  ICM_20948_fss_t myFSS; // This uses a "Full Scale Settings" structure that can contain values for all configurable sensors
  myFSS.g = dps1000;     // (ICM_20948_GYRO_CONFIG_1_FS_SEL_e): dps250, dps500, dps1000, or dps2000
  myICM.setFullScale( (ICM_20948_Internal_Gyr), myFSS );
  if( myICM.status != ICM_20948_Stat_Ok){
    Serial.print(F("setFullScale returned: "));
    Serial.println(myICM.statusString());
  }
}

void loop()
{
  // motorCharacteristics();
  // motorSteady(170);
  // runPIDLoop(200, 0.5, 0.002, 0.1); // good value for GyroZ 200, now let's scale it down
  runPIDLoop(170, 0.425, 0.0017, 0.085); // from scale down (0.85) of GyroZ 200
  // runPIDLoop(150, 0.375, 0.0015, 0.075); // from scale down (0.75) of GyroZ 200
  // runPIDLoop(130, 0.325, 0.0013, 0.065); // from scale down (0.65) of GyroZ 200
}

void runPIDLoop(int setpoint, float KP, float KI, float KD){
  static long startTime = millis();
  static float prevFeedback = 0;
  static float prevPIDOutput = 0;
  static bool IntegralClamp = false;

  if( myICM.dataReady() ){
    // Record the starting magnetometer heading once
    if ( startingYaw_m == 0 ){
      myICM.getAGMT();
      float yaw_m = atan2(myICM.magY(), myICM.magX())*180/M_PI;
      if (yaw_m < 0.0 ) yaw_m = 360 + yaw_m;
      startingYaw_m = yaw_m;
      // Serial.println(startingYaw_m);
    }

    // if ( millis()-startTime < 10000 ){
    if ( rotateCount < 1 ){  // stop after one full rotation (counted in printData)
      float pidOutput = computePID(setpoint, KP, KI, KD, prevFeedback, IntegralClamp);
      float motorStep = prevPIDOutput + pidOutput;

      // Limit motor power to [80, 255]; flag saturation so computePID skips the integral term
      if (motorStep > 255) {
        motorStep = 255;
        IntegralClamp = true;
      } else if (motorStep < 80) {
        motorStep = 80;
        IntegralClamp = true;
      } else {
        IntegralClamp = false;
      }

      // turnClockwise(motorStep);
      turnCounterClockwise(motorStep);
      myICM.getAGMT();
      printData(motorStep);
      prevFeedback = myGyroZ;
      prevPIDOutput = pidOutput;
    } else {
      digitalWrite( LED_BUILTIN, LOW );
      motorStop();
    }
  }
}

float computePID(int setPoint, float KP, float KI, float KD, float input, bool IntegralClamp){
  static long prevTime = millis();
  static float cumError = 0;
  static float rateError = 0;
  static float lastError = 0;

  long currentTime = millis();
  long elapsedTime = currentTime - prevTime;  // ms since the last call
  float error = setPoint - abs(input);        // abs() so either turning direction works
  cumError += error * elapsedTime;

  // Guard against dividing by zero on the first call (or two calls in the same millisecond)
  rateError = (elapsedTime > 0) ? (error - lastError) / elapsedTime : 0;

  float out = KP * error + KD * rateError;
  if (!IntegralClamp){
    out += KI * cumError;  // skip the integral term while the motor output is clamped
  }
  // if ((error > 0 && out <0 ) || (error < 0 && out > 0 )) {
  //   out = 0;
  // }

  lastError = error;
  prevTime = currentTime;
  return out;
}

void motorSteady(int motorLevel){
  static long startTime = millis();
  if ( millis() - startTime < 10000 ){
    turnClockwise(motorLevel);
    printData(motorLevel);
  } else {
    digitalWrite( LED_BUILTIN, LOW );
    motorStop();
  }
}

void motorCharacteristics(){
  static int motorLevel = 0;
  static int increment = 1;
  static long startTime = millis();

  if ( millis() - startTime < 24000 ){
    turnClockwise(motorLevel);
    printData(motorLevel);
    if (motorLevel == 255) increment = -1;  // ramp back down after reaching full power
    motorLevel += increment;
    if (motorLevel == 0) increment = 0;     // hold at 0 once the ramp is finished
    delay(10);
  } else {
    digitalWrite( LED_BUILTIN, LOW );
    motorStop();
  }
}

void turnClockwise(int motorLevel){
  digitalWrite( LED_BUILTIN, HIGH );
  myMotorDriver.setDrive( 0, 1, motorLevel);
  myMotorDriver.setDrive( 1, 0, motorLevel);
  delay(5);
}

void turnCounterClockwise(int motorLevel){
  digitalWrite( LED_BUILTIN, HIGH );
  myMotorDriver.setDrive( 0, 0, motorLevel);
  myMotorDriver.setDrive( 1, 1, motorLevel);
  delay(5);
}

void printData(int motorLevel){
  static unsigned long last_time = millis();  // set on the first call, so the first dt is not the whole time since boot
  static float prevYaw_m = 0;
  float dist = getDistance();

  //Gyroscope
  Serial.print(motorLevel);
  Serial.print(", ");
  myGyroZ = myICM.gyrZ();
  Serial.print(myGyroZ);

  //yaw angle using gyroscope
  float dt = (millis() - last_time) / 1000.;
  last_time = millis();
  myYaw += myGyroZ * dt;
  if (abs(myYaw) > 360) {  // count one full rotation and restart the yaw accumulator
    myYaw = 0;
    rotateCount++;
  }
  Serial.print(", ");
  Serial.print(myYaw);

  //magnetometer
  float yaw_m = atan2(myICM.magY(), myICM.magX())*180/M_PI;
  yaw_m += 180;
  // if (yaw_m < 0.0 ) {
  //   yaw_m = 360 + yaw_m;
  // }
  // if ( yaw_m < startingYaw_m && prevYaw_m > startingYaw_m ){
  //   rotateCount++;
  // }
  Serial.print(", ");
  Serial.print(yaw_m);
  // Serial.print(", ");
  // Serial.print(prevYaw_m);
  // Serial.print(", ");
  // Serial.print(startingYaw_m);
  Serial.print(", ");
  Serial.print(dist);
  Serial.println();
  prevYaw_m = yaw_m;
}

int getDistance(){
  distanceSensor.startRanging(); //Write configuration bytes to initiate measurement
  while (!distanceSensor.checkForDataReady())
  {
    delay(1);
  }
  int distance = distanceSensor.getDistance(); //Get the result of the measurement from the sensor
  distanceSensor.clearInterrupt();
  distanceSensor.stopRanging();
  return distance;
}

void motorForward(){
  myMotorDriver.setDrive( 0, 1, _pwrLevel);                 // Drive motor 0 forward at _pwrLevel
  myMotorDriver.setDrive( 1, 1, _pwrLevel*_adjustPercent);  // Drive motor 1 forward, scaled by _adjustPercent
  delay(10);
}

void motorCoasting(int time){
  // Zero drive level on both motors, then wait
  myMotorDriver.setDrive( 0, 0, 0);
  myMotorDriver.setDrive( 1, 0, 0);
  delay(time);
}

void motorBackward(int time){
  myMotorDriver.setDrive( 0, 0, _pwrLevel);
  myMotorDriver.setDrive( 1, 0, _pwrLevel*_adjustPercent);
  delay(time);
}

void motorPivotRight(int time){
  // Motors in opposite directions at 70% of the normal power level
  myMotorDriver.setDrive( 0, 1, _pwrLevel*0.7);
  myMotorDriver.setDrive( 1, 0, _pwrLevel*_adjustPercent*0.7);
  delay(time);
}

void motorPivotLeft(int time){
  // Mirror image of motorPivotRight
  myMotorDriver.setDrive( 0, 0, _pwrLevel*0.7);
  myMotorDriver.setDrive( 1, 1, _pwrLevel*_adjustPercent*0.7);
  delay(time);
}

void motorStop(){
  myMotorDriver.setDrive( 0, 1, 0);
  myMotorDriver.setDrive( 1, 1, 0);
  delay(10);
}

For my transformation matrices, I first based some values on where I mounted the sensors on the car. I put the IMU about 6 cm above the car (to avoid interference from the motors' magnetic fields) at the center of the car, facing straight ahead (angle = 0 degrees). My time-of-flight sensor is mounted 4 cm from the center (origin) of the car, on the cardboard bumper at the front, also at an angle of 0 degrees. Later in the lab, I converted the distance-sensor measurements to the inertial reference frame of the room using dx, dy, and dz from my manual measurements. I set roll and pitch to 0 and took yaw from the gyroscope.
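With roll and pitch at 0, the frame change above reduces to 2D homogeneous transforms in yaw only. The following is a minimal sketch rather than the code I ran: the function names are hypothetical, the ToF sensor is assumed to sit 4 cm ahead of the robot center on its x-axis facing forward, and heights (dz) are dropped because the map is planar.

```python
import math


def pose_transform(dx, dy, yaw_deg):
    """3x3 homogeneous transform for a planar pose (roll = pitch = 0)."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [[c, -s, dx],
            [s, c, dy],
            [0.0, 0.0, 1.0]]


def matmul3(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def tof_to_world(dist_m, robot_x, robot_y, robot_yaw_deg,
                 sensor_dx=0.04, sensor_yaw_deg=0.0):
    """Map one ToF reading (meters) into the room frame.

    Hypothetical helper: assumes the sensor sits sensor_dx meters ahead of the
    robot center, rotated sensor_yaw_deg from the robot's heading.
    """
    t_world_robot = pose_transform(robot_x, robot_y, robot_yaw_deg)
    t_robot_sensor = pose_transform(sensor_dx, 0.0, sensor_yaw_deg)
    t_world_sensor = matmul3(t_world_robot, t_robot_sensor)
    point = [dist_m, 0.0, 1.0]  # the reading lies on the sensor's own x-axis
    x = sum(t_world_sensor[0][k] * point[k] for k in range(3))
    y = sum(t_world_sensor[1][k] * point[k] for k in range(3))
    return x, y


# Robot at (1.0, 2.0) facing +y (yaw 90 deg), obstacle 0.5 m in front of the sensor
print(tof_to_world(0.5, 1.0, 2.0, 90.0))  # approximately (1.0, 2.54)
```

Chaining robot-to-world and sensor-to-robot matrices keeps the mounting offset separate from the pose, so moving the sensor only changes `sensor_dx` and `sensor_yaw_deg`.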

When collecting measurements, I manually placed the robot at 3 known poses relative to the origin of my map (which I defined as one of the corners of the map). I think these three poses were sufficient to map out the entire area thoroughly. I also started the robot in the same orientation for all my scans to make it easier to combine the map data from all three points. A helpful tip: with a very long, flexible USB-C cable, you don't have to keep plugging the robot in and out every time you update your Arduino code, and can instead just keep re-uploading and running it.

I made a polar plot for each of my poses and also repeated the first scan twice in the same position, which led me to conclude that the ToF sensor together with the PID controller produces reliable and consistent data. My three scans are shown below, with the first one showing that the scan is very repeatable.
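One simple way to put a number on that repeatability is to compare the two runs of the first scan reading by reading. This is a sketch assuming both runs log the same number of readings at matching yaw angles (which the PID-controlled rotation is meant to provide); `scan_difference` is a hypothetical helper and the readings below are made up, only to show the call.

```python
import math


def scan_difference(scan_a, scan_b):
    """Return (mean absolute difference, RMS difference) between two scans.

    Assumes reading i of both scans was taken at the same yaw angle.
    Units match the inputs (e.g. mm from the ToF sensor).
    """
    if not scan_a or len(scan_a) != len(scan_b):
        raise ValueError("scans must be non-empty and the same length")
    diffs = [a - b for a, b in zip(scan_a, scan_b)]
    mean_abs = sum(abs(d) for d in diffs) / len(diffs)
    rms = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return mean_abs, rms


# Made-up readings (mm), only to show the call
print(scan_difference([100, 200, 300], [110, 190, 300]))
```

The RMS value weights occasional large disagreements (for example a reading that glances off an edge) more heavily than the mean absolute difference does.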

Position 1:

Position 2:

Position 3:

To produce these polar plots, my code converted each yaw angle to radians and applied the offset of each pose so I knew where it was relative to the origin of my map. I then plotted these tables of points in a polar coordinate system, producing the images above.
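The conversion described above might look like the following minimal sketch. It assumes distances arrive in millimeters, yaw is in degrees measured from the same starting orientation used for every scan, and the 4 cm sensor offset is added to each range because the robot spins in place about its center; `scan_to_polar` and `polar_to_map` are hypothetical names.

```python
import math

SENSOR_OFFSET_M = 0.04  # assumed: ToF sensor 4 cm ahead of the center of rotation


def scan_to_polar(yaws_deg, dists_mm, sensor_offset_m=SENSOR_OFFSET_M):
    """Turn (yaw, distance) readings into (theta [rad], r [m]) about the robot center."""
    return [(math.radians(yaw), d / 1000.0 + sensor_offset_m)
            for yaw, d in zip(yaws_deg, dists_mm)]


def polar_to_map(points, pose_x, pose_y):
    """Shift polar points into map coordinates using the pose's offset from the origin."""
    return [(pose_x + r * math.cos(theta), pose_y + r * math.sin(theta))
            for theta, r in points]


polar = scan_to_polar([0, 90, 180], [1000, 500, 250])
# For a polar plot with matplotlib: ax = plt.subplot(projection="polar");
# ax.scatter([t for t, _ in polar], [r for _, r in polar])
print(polar_to_map(polar, 0.5, 1.0))
```

Calling `polar_to_map` once per pose with that pose's measured offset and concatenating the results gives the points for a combined scatter plot.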

I measured the offset of each pose from my defined origin and inserted these offsets into my calculations, combining all three scans into a single scatter plot of my room.

The blue points are from my first pose, the green from my second, and the red from my last. As seen, this is very similar to the actual map, shown below:

I was then able to convert my data into a format that I could use in the simulator. I did this by estimating where the actual walls/obstacles were from my scatter plot, drawing lines on top of them, and saving two lists of the lines' endpoints, as shown below.

Here is my scatter plot with lines drawn on top to estimate the walls/obstacles:

I wrote the code for my start and end points as follows:

import numpy as np

start_points = np.array([[-1.63, -1.51],
                         [-1.05, -1.51],
                         [-1.05, -2],
                         [0.75, -2],
                         [0.75, -1.7],
                         [1.2, -1.7],
                         [1.2, 0.02],
                         [-0.18, 0.02],
                         [-0.18, 0.22],
                         [0.13, 0.22],
                         [0.13, 1.60],
                         [-1.00, 1.60],
                         [-1.95, 1.38],
                         [-1.95, 0.43],
                         [-1.57, 0.00],
                         [-1.57, -0.35],
                         [-1.63, -0.35],
                         [-0.95, -0.35],
                         [-0.68, -0.35],
                         [-0.68, -0.35],
                         [-0.95, -0.35]])

end_points = np.array([[-1.05, -1.51],
                       [-1.05, -2],
                       [0.75, -2],
                       [0.75, -1.7],
                       [1.2, -1.7],
                       [1.2, 0.02],
                       [-0.18, 0.02],
                       [-0.18, 0.22],
                       [0.13, 0.22],
                       [0.13, 1.58],
                       [-1.00, 1.60],
                       [-1.95, 1.38],
                       [-1.95, 0.43],
                       [-1.57, 0.00],
                       [-1.57, -0.35],
                       [-1.57, -0.35],
                       [-1.57, -1.51],
                       [-0.68, -0.45],
                       [-0.68, 0.06],
                       [-0.95, 0.06],
                       [-0.95, -0.45]])
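In the lists above, each wall's end point is usually the next wall's start point. An alternative approach is to type each chain of corners once and derive both lists from it, so shared corners are never entered twice. A minimal sketch with hypothetical helper names and a made-up layout; the simulator would still receive two N x 2 arrays (for example via `np.array(starts)`).

```python
def polyline_to_segments(corners, closed=False):
    """Split an ordered chain of corners into matching start/end point lists.

    If closed is True, an extra segment joins the last corner back to the first.
    """
    starts = list(corners[:-1])
    ends = list(corners[1:])
    if closed and len(corners) > 2:
        starts.append(corners[-1])
        ends.append(corners[0])
    return starts, ends


def build_walls(chains):
    """Concatenate segments from several (corners, closed) chains, e.g. outer walls plus a box."""
    all_starts, all_ends = [], []
    for corners, closed in chains:
        starts, ends = polyline_to_segments(corners, closed)
        all_starts.extend(starts)
        all_ends.extend(ends)
    return all_starts, all_ends


# Hypothetical layout: an open L-shaped wall and a closed square obstacle
starts, ends = build_walls([
    ([(0.0, 0.0), (2.0, 0.0), (2.0, 1.0)], False),
    ([(0.5, 0.5), (1.0, 0.5), (1.0, 1.0), (0.5, 1.0)], True),
])
print(len(starts), len(ends))  # 6 6
```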

Opening up lab7b.ipynb, I could then visualize my data in the plotter:

To pare down the readings when I have more than the 18 that the simulator expects, I would probably average them: divide the total number of samples by 18 and average each group of consecutive readings. I would also weight the middle value of each group more heavily. For example, with 90 readings (5 readings averaged for each of the 18 outputs), I would give the middle value extra weight, since it should correspond to the center of that angle range. Averaging compensates for inaccuracies in the exact angular position by incorporating neighboring values, while weighting the middle value gives more significance to the reading that should be closest to the actual physical value.
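The weighted averaging described above could be sketched as follows. It assumes the readings are in angular order and their count is an exact multiple of 18; the weight of 2 for the middle sample is an arbitrary illustrative choice, and `downsample` is a hypothetical helper. For groups with an even number of samples there is no single middle, so the sketch uses the upper of the two central samples.

```python
def downsample(readings, n_out=18, center_weight=2.0):
    """Average consecutive groups of readings down to n_out values.

    The middle sample of each group gets weight center_weight; the others get 1.
    """
    if n_out <= 0 or len(readings) == 0 or len(readings) % n_out != 0:
        raise ValueError("len(readings) must be a positive multiple of n_out")
    group_size = len(readings) // n_out
    mid = group_size // 2  # index of the (upper) middle sample in each group
    weights = [center_weight if i == mid else 1.0 for i in range(group_size)]
    out = []
    for g in range(n_out):
        group = readings[g * group_size:(g + 1) * group_size]
        total = sum(w * r for w, r in zip(weights, group))
        out.append(total / sum(weights))
    return out


# 90 readings -> 18 values, 5 readings per group as in the example above
print(downsample([float(i) for i in range(90)])[:3])  # [2.0, 7.0, 12.0]
```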

Grid Localization Using Bayes Filter

The objective of the lab was to implement grid localization using the Bayes filter method.

I downloaded the lab8 base code and followed the setup instructions as in the previous labs. I used the lab8-manager to start the simulator ("a" and "s") and the plotter ("b" and "s"), and started the Jupyter server in my VM from the work directory.

Before I tried to cut down on computation time, I found that running the many iterations of the Bayes filter took a very long time and a lot of patience (though I did get through quite a few psets and discover a lot of good music while waiting for my code to finish running). Because of this, I knew I needed to write efficient code, so I implemented some of the tips from the lab handout, which I describe below.

My Bayes filter functions for the prediction step are shown below. I kept most of my functions the same as in Lab 6, though I fixed a few earlier mistakes. In the compute_control function, I computed the rot1, trans, and rot2 values needed for the odometry model from the current and previous poses, and normalized the angles to lie between -180 and 180 degrees. My odometry motion model then uses this function to return the probability of the current pose given the previous pose and the control data, computed as a product of Gaussians with the given rotation and translation sigmas. I used these in the prediction step to find the prior belief, only including previous states whose belief was greater than 0.0001 (to cut down on computation time). Because of this, I also had to normalize the probabilities at the end of the loop to account for the skipped states, using numpy to sum all of the loc.bel_bar values.

In particular, this step was done with loc.bel_bar = loc.bel_bar / np.sum(loc.bel_bar).

import math
import numpy as np
from tqdm import tqdm

# In the docstrings, "Pose" refers to a tuple (x, y, yaw) in (meters, meters, degrees)

# In world coordinates
def compute_control(cur_pose, prev_pose):
    """ Given the current and previous odometry poses, this function extracts
    the control information based on the odometry motion model.

    Args:
        cur_pose ([Pose]): Current Pose
        prev_pose ([Pose]): Previous Pose

    Returns:
        [delta_rot_1]: Rotation 1 (degrees)
        [delta_trans]: Translation (meters)
        [delta_rot_2]: Rotation 2 (degrees)
    """
    x_delta = cur_pose[0] - prev_pose[0]  # x of current pose - x of previous pose
    y_delta = cur_pose[1] - prev_pose[1]  # y of current pose - y of previous pose
    # initial rotation: heading of the displacement relative to the previous yaw
    delta_rot_1 = loc.mapper.normalize_angle(math.degrees(math.atan2(y_delta, x_delta)) - prev_pose[2])
    delta_trans = math.sqrt(x_delta**2 + y_delta**2)
    # final rotation: the remaining change in yaw after rot1
    delta_rot_2 = loc.mapper.normalize_angle(cur_pose[2] - prev_pose[2] - delta_rot_1)
    return delta_rot_1, delta_trans, delta_rot_2

# In world coordinates
def odom_motion_model(cur_pose, prev_pose, u):
    """ Odometry Motion Model

    Args:
        cur_pose ([Pose]): Current Pose
        prev_pose ([Pose]): Previous Pose
        (rot1, trans, rot2) (float, float, float): A tuple with control data
            in the format (rot1, trans, rot2) with units (degrees, meters, degrees)

    Returns:
        prob [float]: Probability p(x'|x, u)
    """
    (uRot1, uTrans, uRot2) = u
    (pRot1, pTrans, pRot2) = compute_control(cur_pose, prev_pose)
    # Normalize the angle differences so that e.g. 179 and -179 count as 2 degrees apart
    prob = (loc.gaussian(loc.mapper.normalize_angle(uRot1 - pRot1), 0, loc.odom_rot_sigma)
            * loc.gaussian(uTrans - pTrans, 0, loc.odom_trans_sigma)
            * loc.gaussian(loc.mapper.normalize_angle(uRot2 - pRot2), 0, loc.odom_rot_sigma))
    return prob

def prediction_step(cur_odom, prev_odom):
    """ Prediction step of the Bayes Filter.
    Update the probabilities in loc.bel_bar based on loc.bel from the previous time step and the odometry motion model.

    Args:
        cur_odom ([Pose]): Current Pose
        prev_odom ([Pose]): Previous Pose
    """
    u = compute_control(cur_odom, prev_odom)
    loc.bel_bar = np.zeros(loc.bel.shape)  # each bel_bar entry is a sum, so start from 0
    for xp, yp, ap in tqdm(np.ndindex(loc.bel.shape)):  # all possible previous poses
        if loc.bel[xp][yp][ap] > 0.0001:  # skip previous poses with negligible belief
            prev_pose = loc.mapper.from_map(xp, yp, ap)
            for xc, yc, ac in np.ndindex(loc.bel.shape):  # all possible current poses
                loc.bel_bar[xc][yc][ac] += odom_motion_model(loc.mapper.from_map(xc, yc, ac), prev_pose, u) * loc.bel[xp][yp][ap]
    # renormalize to account for the skipped (low-probability) states
    loc.bel_bar = loc.bel_bar / np.sum(loc.bel_bar)
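To see the thresholding and renormalization in isolation, here is a toy 1D version of the prediction step, independent of the lab's `loc` and `mapper` objects. It is only a sketch: the grid is a line of cells, the motion model is a single Gaussian on the displacement (in cells) rather than the three-Gaussian odometry model above, and `predict` is a hypothetical name.

```python
import math


def gaussian(x, mu, sigma):
    """Normal probability density at x."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))


def predict(bel, u, sigma, threshold=1e-4):
    """Toy prediction step: bel_bar[c] = sum_p p(c | p, u) * bel[p].

    Previous cells with bel[p] <= threshold are skipped, so the result is
    renormalized to sum to 1 afterwards.
    """
    n = len(bel)
    bel_bar = [0.0] * n  # each entry is a sum, so start from zero
    for p in range(n):  # previous cell in the outer loop: skipped cells cost nothing
        if bel[p] <= threshold:
            continue
        for c in range(n):
            bel_bar[c] += gaussian((c - p) - u, 0.0, sigma) * bel[p]
    total = sum(bel_bar)
    if total == 0.0:
        raise ValueError("every previous cell was below the threshold")
    return [b / total for b in bel_bar]


# Robot almost certainly in cell 2, commanded to move 3 cells
bel_bar = predict([0.0, 0.0, 1.0] + [0.0] * 7, u=3, sigma=0.5)
print(max(range(len(bel_bar)), key=bel_bar.__getitem__))  # 5
```

Putting the previous cell in the outer loop means a skipped cell costs one comparison instead of a full pass over every current cell, which is where most of the savings come from when the belief is concentrated.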

For the update step and the sensor model, I kept my code essentially the same as in Lab 7. For the sensor model, I used the true view (from the function get_views) and the data the robot obtains by spinning in a circle and collecting 18 readings. I then computed p(z|x) by evaluating a Gaussian on the difference between the observed and true views, with a mean of 0 and a standard deviation of sensor_sigma. The update step combines the sensor model with the prior belief to compute the belief, updating the values in the belief array. I also had to normalize the result so that all values in loc.bel sum to 1.
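The update step described above can be illustrated with a small toy example, independent of the lab's `loc` object. It assumes each cell's expected readings are already known (playing the role of get_views) and multiplies one Gaussian per reading, which is one common way to combine the per-reading likelihoods; `update` is a hypothetical name.

```python
import math


def gaussian(x, mu, sigma):
    """Normal probability density at x."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))


def update(bel_bar, expected_views, obs, sigma):
    """Toy update step: bel[i] is proportional to p(z | x_i) * bel_bar[i].

    p(z | x_i) is the product of one Gaussian per reading, evaluated on the
    difference between the observed and expected range.
    """
    bel = []
    for prior, views in zip(bel_bar, expected_views):
        likelihood = 1.0
        for z, v in zip(obs, views):
            likelihood *= gaussian(z - v, 0.0, sigma)
        bel.append(likelihood * prior)
    total = sum(bel)
    return [b / total for b in bel]  # normalize so the beliefs sum to 1


# Two candidate cells with equal prior; the observation matches cell 0
print(update([0.5, 0.5], [[1.0, 2.0], [3.0, 3.0]], [1.1, 2.0], sigma=0.1))
```

With 18 readings per cell, a product of many small densities can underflow to 0; summing log-likelihoods instead is a common workaround.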

def sensor_model(obs, xt):
    """ This is the equivalent of p(z|x).

    Args:
        obs ([ndarray]): A 1D array consisting of the measurements made in rotation loop
        xt ((int, int, int)): Grid indices (cx, cy, ca) of the state being evaluated

    Returns:
        [ndarray]: Returns a 1D array of size 18 (=loc.OBS_PER_CELL) with the likelihood of each individual measurement
    """
    (cx, cy, ca) = xt
    views = loc.mapper.get_views(cx, cy, ca)  # true (expected) view from this cell
    # One likelihood per reading; the update step combines them (e.g. with np.prod)
    return loc.gaussian(obs - views, 0, loc.sensor_sigma)

def update_step():

""" Update step of the Bayes Filter.

Update the probabilities in loc.bel based on loc.bel_bar and the sensor model.

"""

# update for state xt with measurement z